Abstract

We derive computationally simple and intuitive score tests of neglected serial correlation in unobserved component univariate models using frequency domain techniques. In some common situations in which the alternative model information matrix is singular under the null, we derive one-sided extremum tests, which are asymptotically equivalent to likelihood ratio tests, and explain how to compute reliable Wald tests. We also explicitly relate the incidence of those problems to the model identification conditions and compare our tests with tests based on the reduced form prediction errors. Our Monte Carlo exercises assess the finite sample reliability and power of our proposed tests.

1 Introduction

The superposition of ARIMA time series models forms the basis of two dominant approaches to the classical decomposition of a univariate time series into trend, cyclical, seasonal and irregular components: the reduced form “model-based” decomposition analysed by Box et al. (1978) and Pierce (1978) and further extended by Agustín Maravall and his co-authors, and the so-called “structural time series” models studied by Nerlove (1967), Engle (1978) and Nerlove et al. (1979) and subsequently developed by Andrew Harvey and his co-authors.

In both cases, the model parameters are estimated by maximising the Gaussian log-likelihood function of the observed data, which can be readily obtained either as a by-product of the Kalman filter prediction equations or from Whittle’s (1962) frequency domain asymptotic approximation. Once the parameters have been estimated, filtered values of the unobserved components can be extracted by means of the Kalman smoother or its Wiener–Kolmogorov counterpart. These estimation and filtering issues are well understood (see Harvey 1989; Durbin and Koopman 2012 for textbook treatments), and the same can be said of their efficient numerical implementation (see Commandeur et al. 2011 and the references therein).

In contrast, specification tests for these models are far less well known. While sophisticated users will often look at several diagnostics, such as the ones suggested by Maravall (1987, 1999, 2003), or the ones computed by the STAMP software package following Harvey and Koopman (1992) (see Koopman et al. 2009 for further details), formal tests are hardly ever reported in empirical work. One particularly relevant issue is the correct specification of the parametric ARIMA models for the unobserved components, as the various outputs of the model could be misleading under misspecified dynamics.

The objective of our paper is precisely to derive tests for neglected serial correlation in the underlying elements of univariate unobserved components (UCARIMA) models. For computational reasons, we focus most of our discussion on score tests, which only require estimation of the model under the null. As is well known, though, in standard situations likelihood ratio (LR), Wald and Lagrange multiplier (LM) tests are asymptotically equivalent under the null and sequences of local alternatives, and therefore they share their optimality properties. Another important advantage of score tests is that they often coincide with tests of easy-to-interpret moment conditions (see Newey 1985; Tauchen 1985), which will continue to have non-trivial power even in situations for which they are not optimal.

Earlier work on specification testing in unobserved component models includes Engle and Watson (1980), who explained how to apply the LM testing principle in the time domain for dynamic factor models with static factor loadings, Harvey (1989), who provides a detailed discussion of time domain and frequency domain testing methods in the context of univariate “structural time series” models, and Fernández (1990), who applied the LM principle in the frequency domain to a multivariate structural time series model. More recently, in a companion paper (Fiorentini and Sentana 2013) we have derived tests for neglected serial correlation in the latent variables of dynamic factor models using frequency domain techniques.

In the specific context of UCARIMA models, the contribution of this paper is threefold.

First, we propose dynamic specification tests that are very simple to implement, and even simpler to interpret. Once a model has been specified and estimated, the tests that we propose can be routinely computed from simple statistics of the smoothed values of the innovations of the different components. And even though our theoretical derivations make extensive use of spectral methods for time series, we provide both time domain and frequency domain interpretations of the relevant scores, so researchers who strongly prefer one method over the other could apply them without abandoning their favourite estimation techniques.

Second, we provide a thorough discussion of some common situations in which the standard form of LM tests cannot be computed because the information matrix of the alternative model is singular under the null. In those irregular cases, we derive versions of the score tests that remain asymptotically equivalent to the LR tests, which become one-sided, and explain how to compute asymptotically reliable Wald tests. We also explicitly relate the incidence of those problems to the identification conditions for UCARIMA models, and highlight that they contradict the widely held view that increases in the MA and AR polynomials of the same order provide locally equivalent alternatives in univariate tests for serial correlation (see e.g. Godfrey 1988).

Third, we compare dynamic specification tests for the underlying components with tests based on the reduced form prediction errors. In this regard, we study their relative power and discuss some cases in which they are numerically equivalent.

The rest of the paper is organised as follows. In Sect. 2, we review the properties of UCARIMA models, their estimators and filters. Then, in Sect. 3 we derive our tests and discuss their potential pitfalls, comparing them to reduced form tests in Sect. 4. This is followed by a Monte Carlo evaluation of their finite sample behaviour in Sect. 5. Finally, our conclusions can be found in Sect. 6. Auxiliary results are gathered in the Appendices.

2 Theoretical background

As we have just mentioned, in this section we formally introduce UCARIMA models, obtain their reduced form representation, review maximum likelihood estimation in the frequency domain, apply Wiener–Kolmogorov filtering theory to optimally extract the unobserved components and derive the time series properties of the smoothed series.

2.1 UCARIMA models

To keep the notation to a minimum throughout the paper we focus on models for a univariate observed series \(y_{t}\) that can be defined in the time domain by the equations:

where \(x_{t}\) is the “signal” component, \( u_{t}\) the orthogonal “non-signal” component, \(\alpha _{x}(L)\) and \(\alpha _{u}(L)\) are one-sided polynomials of orders \(p_{x}\) and \(p_{u}\), respectively, while \(\beta _{x}(L)\) and \( \beta _{u}(L)\) are one-sided polynomials of orders \(q_{x}\) and \(q_{u}\) coprime with \(\alpha _{x}(L)\) and \(\alpha _{u}(L)\), respectively, \(I_{t-1}\) is an information set that contains the values of \(y_{t}\) and \(x_{t}\) up to, and including time \(t-1, \mu \) is the unconditional mean and \({\varvec{\theta }}\) refers to all the remaining model parameters.
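For reference, the model just described can be written compactly as follows. This display is a reconstruction from the definitions in the surrounding text rather than a verbatim copy of the typeset equations; in particular, the innovation labels \(f_{t}\) and \(v_{t}\) and the variance symbols \(\sigma _{f}^{2}\) and \(\sigma _{v}^{2}\) are inferred from their later use in the paper, and the conditional normality statement is an assumption consistent with the Gaussian likelihood used throughout.

```latex
\begin{aligned}
y_{t} &= \mu + x_{t} + u_{t}, \\
\alpha_{x}(L)\,x_{t} &= \beta_{x}(L)\,f_{t}, \\
\alpha_{u}(L)\,u_{t} &= \beta_{u}(L)\,v_{t}, \\
\begin{pmatrix} f_{t} \\ v_{t} \end{pmatrix} \Big|\; I_{t-1};\,\mu,\boldsymbol{\theta}
&\sim N\!\left[
\begin{pmatrix} 0 \\ 0 \end{pmatrix},
\begin{pmatrix} \sigma_{f}^{2} & 0 \\ 0 & \sigma_{v}^{2} \end{pmatrix}
\right].
\end{aligned}
```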

Importantly, we maintain the assumption that the researcher makes sure that the parameters \({\varvec{\theta }}\) are identified before estimating the model under the null.Footnote 1 Hotta (1989) provides a systematic way to check for identification (see Maravall 1979 for closely related results). Specifically, let c denote the degree of the polynomial greatest common divisor of \(\alpha _{x}(L)\) and \(\alpha _{u}(L)\), so that they share c common roots. Then, the UCARIMA model above will be identified (except at a set of parameter values of measure 0) when there are no restrictions on the AR and MA polynomials if and only if either \(p_{x}\ge q_{x}+c+1\) or \(p_{u}\ge q_{u}+c+1\), so that at least one of the components must be a “top-heavy” ARMA process in the terminology of Burman (1980) (i.e. a process in which the AR order exceeds the MA one).Footnote 2 Given the exchangeability of signal and non-signal components in the formulation above, in what follows we assume without loss of generality that this identification condition is satisfied by the signal component. In particular, we assume that \(p_{x}\ge q_{x}+c+1\) and \(p_{x}-q_{x}\ge p_{u}-q_{u}\), and that in case of equality, \(p_{x}\ge p_{u}\).
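The order condition quoted above is easy to check mechanically. The following is a minimal sketch, not part of the original paper; the function name `ucarima_identified` is hypothetical, and the check covers only the generic order condition (it says nothing about the measure-zero exceptions or about models with restricted polynomials).

```python
def ucarima_identified(p_x: int, q_x: int, p_u: int, q_u: int, c: int = 0) -> bool:
    """Generic order condition for identification quoted in the text.

    p_x, q_x : AR and MA orders of the signal
    p_u, q_u : AR and MA orders of the non-signal component
    c        : number of roots shared by the two AR polynomials
    At least one component must satisfy p >= q + c + 1.
    """
    return p_x >= q_x + c + 1 or p_u >= q_u + c + 1


# AR(1) signal plus white noise: identified.
print(ucarima_identified(1, 0, 0, 0))
# ARMA(1,1) signal plus white noise: the order condition fails.
print(ucarima_identified(1, 1, 0, 0))
```

Applying the same check to the orders implied by each alternative hypothesis shows at a glance whether the extended model still satisfies the order condition.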

In this paper we are interested in hypothesis tests for \(p_{x}=p_{x}^{0}\) vs \(p_{x}=p_{x}^{0}+k_{x}\) or \(p_{u}=p_{u}^{0}\) vs \(p_{u}=p_{u}^{0}+k_{u}\), or the analogous hypotheses for \(q_{x}\) and \(q_{u}\). For simplicity, we focus most of the discussion on those cases in which \(k_{x}\) and \(k_{u}\) are in fact 1, which leads to the following four alternative hypotheses of interest:

1. Sar1: ARMA(\(p_{x}+1,q_{x}\)) \(+\) ARMA(\(p_{u},q_{u}\))
2. Sma1: ARMA(\(p_{x},q_{x}+1\)) \(+\) ARMA(\(p_{u},q_{u}\))
3. Nar1: ARMA(\(p_{x},q_{x}\)) \(+\) ARMA(\(p_{u}+1,q_{u}\))
4. Nma1: ARMA(\(p_{x},q_{x}\)) \(+\) ARMA(\(p_{u},q_{u}+1\))

Given that they raise no additional issues, extensions to higher \(k_{x}\) and \(k_{u}\) are only briefly discussed in Sect. 3.1 below, as well as in our concluding remarks.

2.2 Reduced form representation of the model

Unobserved component models can readily handle integrated variables, but for simplicity of exposition in what follows we maintain the assumption that \(y_{t}\) is a covariance stationary process, possibly after suitable differencing, as in Appendix 1.

Under stationarity, the spectral density of the observed variable is proportional to

Given that

it follows that the reduced form model will be an ARMA process with maximum orders \(p_{y}=p_{x}+p_{u}\) for the AR polynomial \(\alpha _{y}(.)=\alpha _{x}(.)\alpha _{u}(.)\) and \(q_{y}=\max (p_{x}+q_{u},q_{x}+p_{u})\) for the MA polynomial \(\beta _{y}(.)\). Cancellation will trivially occur when \(\alpha _{x}(.)\) and \(\alpha _{u}(.)\) share c common roots, but there could also be other cases (see Granger and Morris 1976 for further details). The coefficients of \(\beta _{y}(L)\), as well as \(\sigma _{a}^{2}\), which is the variance of the univariate Wold innovations, \(a_{t}\), are obtained by matching autocovariances (see Fiorentini and Planas 1998 for a comparison of numerical methods). Assuming strict invertibility of the MA part, we could then obtain the reduced form innovations \(a_{t}\) from the observed process by means of the one-sided filter

But as is well known, these reduced form residuals can also be obtained from the prediction equations of the Kalman filter without making use of the expressions for \(\alpha _{y}(.)\) or \(\beta _{y}(.)\).
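To make the autocovariance-matching step concrete, here is a small sketch for the simplest case of an AR(1) signal plus white noise, where the reduced form is an ARMA(1, 1). The function names are hypothetical, and writing the MA part as \(a_{t}+\beta a_{t-1}\) is a sign convention assumed for the example, which may differ from the paper's.

```python
import math


def reduced_form_orders(p_x, q_x, p_u, q_u):
    """Maximum ARMA orders (p_y, q_y) of the reduced form, absent cancellation."""
    return p_x + p_u, max(p_x + q_u, q_x + p_u)


def ar1_plus_noise_reduced_form(alpha, sigma2_f, sigma2_u):
    """Invertible MA(1) coefficient and Wold innovation variance.

    (1 - alpha L) y_t = f_t + (1 - alpha L) u_t is an MA(1), written here as
    a_t + beta * a_{t-1} (assumed sign convention). Its two autocovariances
    are matched to those implied by the structural parameters.
    """
    c0 = sigma2_f + (1.0 + alpha ** 2) * sigma2_u  # variance of the MA(1) part
    c1 = -alpha * sigma2_u                         # first autocovariance
    if c1 == 0.0:
        return 0.0, c0                             # the MA part is white noise
    rho = c1 / c0
    # Root of rho*beta^2 - beta + rho = 0 that lies inside the unit circle.
    beta = (1.0 - math.sqrt(1.0 - 4.0 * rho ** 2)) / (2.0 * rho)
    return beta, c1 / beta


beta, sigma2_a = ar1_plus_noise_reduced_form(0.5, 1.0, 1.0)
print(reduced_form_orders(1, 0, 0, 0), beta, sigma2_a)
```

For higher-order models the same matching has no closed form and requires a numerical spectral factorisation, which is the comparison the text attributes to Fiorentini and Planas (1998).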

2.3 Maximum likelihood estimation in the frequency domain

Let

denote the periodogram of \(y_{t}\) and \(\lambda _{j}=2\pi j/T (j=0,\ldots , T-1)\) the usual Fourier frequencies. If we assume that \(g_{yy}(\lambda )\) is not zero at any of those frequencies, the so-called Whittle (discrete) spectral approximation to the log-likelihood function isFootnote 3

The MLE of \(\mu \), which only enters through \(I_{yy}(\lambda )\), is the sample mean, so in what follows we focus on demeaned variables. In turn, the score with respect to all the remaining parameters is

The information matrix is block diagonal between \(\mu \) and the elements of \( {\varvec{\theta }}\), with the (1, 1)-element being \(g_{yy}(0)\) and the (2, 2)-block

where \(^{*}\) denotes the conjugate transpose of a matrix. A consistent estimator will be provided either by the outer product of the score or by

In fact, by selecting an artificially large value for T in (9), one can approximate (8) to any desired degree of accuracy.

Formal results showing the strong consistency and asymptotic normality of the resulting ML estimators of dynamic latent variable models under suitable regularity conditions were provided by Dunsmuir (1979), who generalised earlier results for VARMA models by Dunsmuir and Hannan (1976). These authors also show the asymptotic equivalence between time and frequency domain ML estimators.Footnote 4
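The Whittle approximation discussed in this subsection can be sketched numerically as follows. This is an illustrative implementation under stated assumptions, not the authors' code: `whittle_loglik` and `g_ar1_plus_noise` are hypothetical names, the data are assumed to be already demeaned, and \(g_{yy}(\lambda )\) is normalised as the autocovariance generating function evaluated at \(e^{-i\lambda }\) (that is, \(2\pi \) times the spectral density), which may differ from the paper's normalisation by a constant.

```python
import numpy as np


def whittle_loglik(y, g_yy):
    """Whittle (discrete) approximation to the Gaussian log-likelihood.

    y    : demeaned observations, length T
    g_yy : callable mapping an array of frequencies to g_yy(lambda), assumed
           strictly positive at every Fourier frequency
    """
    y = np.asarray(y, dtype=float)
    T = y.size
    lam = 2.0 * np.pi * np.arange(T) / T        # Fourier frequencies
    two_pi_I = np.abs(np.fft.fft(y)) ** 2 / T   # 2*pi times the periodogram
    g = g_yy(lam)
    return (-0.5 * T * np.log(2.0 * np.pi)
            - 0.5 * np.sum(np.log(g))
            - 0.5 * np.sum(two_pi_I / g))


def g_ar1_plus_noise(lam, alpha, sigma2_f, sigma2_u):
    """g_yy(lambda) for an AR(1) signal plus white noise."""
    return sigma2_f / np.abs(1.0 - alpha * np.exp(-1j * lam)) ** 2 + sigma2_u


rng = np.random.default_rng(0)
y = rng.standard_normal(200)
print(whittle_loglik(y, lambda lam: g_ar1_plus_noise(lam, 0.5, 1.0, 1.0)))
```

With a flat spectrum the approximation reproduces the exact Gaussian log-likelihood of white noise, which is a convenient sanity check on the normalisation.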

2.4 The (Kalman–)Wiener–Kolmogorov filter

By working in the frequency domain we can easily obtain smoothed estimators of the latent variables too. Specifically, let

denote the spectral decomposition of the observed process. The Wiener–Kolmogorov two-sided filter for the signal \(x_{t}\) at each frequency is given by

Hence, the spectral density of the smoother \(x_{t|T}^{K}\) as \(T\rightarrow \infty \) Footnote 5 will be

while the spectral density of the final estimation error \(x_{t}-x_{t|\infty }^{K}\) will be given by

It is easily seen that \(g_{x^{K}x^{K}}(\lambda )\) will approach \( g_{xx}(\lambda )\) at those frequencies for which \(g_{xx}(\lambda )\) is large relative to \(g_{uu}(\lambda )\), i.e. frequencies with a high signal to noise ratio. In this regard, we can view \(R_{xx}^{2}(\lambda )\) as a frequency-by-frequency coefficient of determination.
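The frequency-by-frequency interpretation can be illustrated with a short sketch of the smoother. It assumes that the filter gain is \(R_{xx}^{2}(\lambda )=g_{xx}(\lambda )/[g_{xx}(\lambda )+g_{uu}(\lambda )]\), which follows from the orthogonality of signal and non-signal, and it applies the filter circularly through the discrete Fourier transform. The name `wk_smooth_signal` is hypothetical, and the circular treatment wraps the two ends of the sample together, so it only approximates the \(T\rightarrow \infty \) filter away from the sample ends.

```python
import numpy as np


def wk_smooth_signal(y, g_xx, g_uu):
    """Circulant Wiener-Kolmogorov smoother of the signal.

    y          : demeaned observations, length T
    g_xx, g_uu : callables returning the spectral densities of the signal and
                 the non-signal component at given frequencies (both must use
                 the same normalisation and be even functions of lambda)
    Returns the smoothed signal and the gain R2_xx at the Fourier frequencies.
    """
    y = np.asarray(y, dtype=float)
    T = y.size
    lam = 2.0 * np.pi * np.arange(T) / T
    gx, gu = g_xx(lam), g_uu(lam)
    r2 = gx / (gx + gu)                           # gain, between 0 and 1
    x_hat = np.fft.ifft(r2 * np.fft.fft(y)).real  # symmetric two-sided filter
    return x_hat, r2


rng = np.random.default_rng(0)
y = rng.standard_normal(128)
g_x = lambda lam: 1.0 / np.abs(1.0 - 0.8 * np.exp(-1j * lam)) ** 2  # AR(1) signal
g_u = lambda lam: np.ones_like(lam)                                 # white noise
x_hat, r2 = wk_smooth_signal(y, g_x, g_u)
print(r2.max(), r2.min())
```

In this example the gain is largest at frequency zero, where the AR(1) signal dominates the noise, and smallest at frequency \(\pi \).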

Having smoothed \(y_{t}\) to estimate \(x_{t}\), we can easily obtain the smoother for \(f_{t}, f_{t|\infty }^{K}\), by applying to \(x_{t|\infty }^{K}\) the one-sided filter

Likewise, we can derive its spectral density, as well as the spectral density of its final estimation error \(f_{t}-f_{t|\infty }^{K}\).

Entirely analogous derivations apply to the non-signal component \(u_{t}\), with the peculiarity that

so that

Finally, we can obtain the autocovariances of \(x_{t|\infty }^{K}, f_{t|\infty }^{K}, u_{t|\infty }^{K}, v_{t|\infty }^{K}\) and their final estimation errors by applying the usual inverse Fourier transformation

2.5 Autocorrelation structure of the smoothed variables

As we have seen in the previous section, smoothed values of the latent variables are the result of optimal symmetric two-sided filters. As a consequence, their serial correlation structure is generally different from that of the unobserved state variables. To see the difference between the spectra of the signal and its estimators, recall that (10) implies that \(g_{x^{K}x^{K}}(\lambda )<g_{xx}(\lambda )\) for any \(\lambda \in (-\pi ,\pi )\) for which \(g_{uu}(\lambda )>0\). Therefore, the variance of the optimal estimator will underestimate the variance of the unobserved signal, as expected.

As argued by Maravall (1987, 1999, 2003), the serial dependence structure of the estimators of the unobserved components can be a useful tool for model diagnostics. Large discrepancies between theoretical and empirical autocovariance functions of those estimators can be interpreted as an indication of model misspecification. On this basis, Maravall (1987) suggested a (Gaussian) parametric bootstrap procedure to obtain confidence intervals for the empirical autocovariances of a single smoothed innovation. Similarly, Maravall (2003) derived expressions for the asymptotic variance of the sampling variances and autocorrelations of the smoothed components using classic results for linear stationary Gaussian processes (see e.g. Lomnicki and Zaremba 1959 or Anderson and Walker 1964). However, in both instances his main objective was to propose useful model diagnostics rather than deriving the null distribution of a formal statistical test. As we shall see in Sect. 3.2, our LM tests carry out the comparison between theoretical and empirical autocovariance functions of the smoothed components in a very precise statistical sense, taking into account both the sampling variability of the estimators of the parameters of the null model and the potential rank failure of the information matrix of the alternative model.

In this regard, an important advantage of our frequency domain approach is that we implicitly compute the required autocovariances without explicitly obtaining the time processes for the unobserved components. Nevertheless, for pedagogical purposes it is of interest to understand those processes.
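As an illustration of how autocovariances can be obtained from a spectral density without writing down the time domain process, the sketch below applies an inverse discrete Fourier transform on a fine frequency grid. The function name and the grid size are hypothetical choices, and \(g(\lambda )\) is again assumed to be normalised as the autocovariance generating function at \(e^{-i\lambda }\).

```python
import numpy as np


def autocov_from_spectrum(g, max_lag, n_grid=4096):
    """Approximate gamma(0), ..., gamma(max_lag) implied by g(lambda).

    With g equal to 2*pi times the spectral density,
    gamma(k) = (1 / 2pi) * integral over [0, 2pi) of g(lambda) exp(i k lambda),
    which is approximated here by an average over n_grid Fourier frequencies.
    """
    lam = 2.0 * np.pi * np.arange(n_grid) / n_grid
    return np.fft.ifft(g(lam)).real[: max_lag + 1]


# AR(1) with coefficient 0.5 and unit innovation variance.
g_ar1 = lambda lam: 1.0 / np.abs(1.0 - 0.5 * np.exp(-1j * lam)) ** 2
print(autocov_from_spectrum(g_ar1, 3))
```

The discrete average aliases autocovariances at lags that differ by multiples of `n_grid`, so the grid should be large relative to the memory of the process; for the AR(1) above the error is negligible.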

Given (10), we can write the spectral density of \(x^{K}\) as

which corresponds to an ARMA \((p_{x}+q_{y},p_{u}+2q_{x})\) process in the absence of cancellation. Hence, the spectral density of the final estimation error \(x_{t}-x_{t|\infty }^{K}\) in (11) will be

which shares the structure of an ARMA \((q_{y},q_{x}+q_{u})\) under the same circumstances.

In turn, the application of (12) to \(x_{t|\infty }^{K}\) implies that the spectral density of \(f_{t|\infty }^{K}\) will be

which suggests an ARMA \((q_{y},p_{u}+q_{x})\) process, while

points instead to an ARMA \((q_{y},p_{x}+q_{u})\) for the final estimation error \(f_{t}-f_{t|\infty }^{K}\).

There are special cases, however, in which the resulting models for the smoothed values of the unobserved variables and their innovations are much simpler. For example, if the signal follows a purely autoregressive process and the non-signal component is white noise, so that \(\beta _{x}(L)=\alpha _{u}(L)=\beta _{u}(L)=1\), then

and

with \(p_{y}=q_{y}=p_{x}\).

Once again, entirely analogous derivations apply to the non-signal component \(u_{t|\infty }^{K}\).

3 Neglected serial correlation tests

In this section we begin by reviewing tests for neglected serial correlation in observable processes. Then, we derive the analogous tests for unobserved components, taking into account that the model parameters must be estimated under the null. Next, we investigate the non-standard situations that arise in UCARIMA models which become underidentified under some of the alternatives that we consider. We conclude by providing a step-by-step procedure for the benefit of practitioners. For simplicity, we maintain the assumption that there are no common roots in the autoregressive polynomials of the signal and non-signal components.

3.1 Testing for serial correlation in univariate observable processes

For pedagogical purposes, let us initially assume that \(x_{t}\) is an observable univariate time series that has been modelled as an AR(2) process. A natural generalisation is

so that the null becomes \(H_{0}:\psi _{x}=0\).Footnote 6 Under the alternative, the spectral density of \(x_{t}\) is

where

Hence, the derivative of \(g_{xx}(\lambda )\) with respect to \(\psi _{x}\) under the null is

As a result, the spectral version of the score with respect to \(\psi _{x}\) under \(H_{0}\) is

where we have exploited the fact that

Given that

the spectral version of the score becomes

In turn, the time domain version of the score will be

which is essentially identical because \(\hat{\gamma } _{ff}(T-1)=T^{-1}f_{T}f_{1}=o_{p}(1)\). Therefore, the spectral LM test of AR(2) versus AR(3) is simply checking that the first sample (circulant) autocovariance of \(f_{t}\), which are the innovations in the observed process, coincides with its theoretical value under \(H_{0}\), exactly like the usual Breusch (1978)–Godfrey (1978a) serial correlation LM test in the time domain (see also Breusch and Pagan 1980 or Godfrey 1988).
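The relationship between the circulant autocovariance, its periodogram-based counterpart and the ordinary sample autocovariance can be verified numerically. The sketch below uses hypothetical function names and simulated white noise in place of estimated innovations; it shows that the frequency domain and circulant time domain quantities coincide exactly, and that for lag 1 they differ from the usual sample autocovariance only by the wrap-around term \(T^{-1}f_{T}f_{1}\).

```python
import numpy as np


def circulant_autocov_time(f, k):
    """(1/T) * sum over t of f_t * f_{t-k}, with time indices taken modulo T."""
    f = np.asarray(f, dtype=float)
    return np.mean(f * np.roll(f, k))


def circulant_autocov_freq(f, k):
    """Same quantity computed from the periodogram at the Fourier frequencies."""
    f = np.asarray(f, dtype=float)
    T = f.size
    lam = 2.0 * np.pi * np.arange(T) / T
    two_pi_I = np.abs(np.fft.fft(f)) ** 2 / T   # 2*pi times the periodogram
    return np.sum(two_pi_I * np.cos(k * lam)) / T


rng = np.random.default_rng(0)
f = rng.standard_normal(100)
ordinary = np.sum(f[1:] * f[:-1]) / f.size      # usual first sample autocovariance
print(circulant_autocov_time(f, 1), circulant_autocov_freq(f, 1), ordinary)
```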

Let us now consider the following alternative generalisation of an AR(2)

In this case, the null is \(H_{0}:\psi _{f}=0\). In turn, the spectral density of \(x_{t}\) under this alternative is

whose derivative with respect to \(\psi _{f}\) under the null is

Therefore, the spectral LM test of AR(2) versus ARMA(2, 1) will be numerically identical to the corresponding test of AR(2) versus AR(3), which confirms that these two alternative hypotheses are locally equivalent for observable time series (see e.g. Godfrey 1988).

Generalisations to test ARMA(p, q) vs ARMA(p \(+\) k, q) for k \(>\) 1 are straightforward, since they only involve higher order (circulant) autocovariances of \(f_{t}\), as in Godfrey (1978b). Similarly, it is easy to show that ARMA(p \(+\) k, q) and ARMA(p, q \(+\) k) multiplicative alternatives are also locally equivalent.Footnote 7 Finally, we could also consider (multiplicative) seasonal alternatives.

3.2 Testing for neglected serial correlation in the unobserved components

Let us now consider univariate unobserved components models, which are the objective of our study. Initially, we assume that the “top heavy” signal process is such that \( p_{x}\ge q_{x}+2\), so that the model is identified under each of the four alternatives stated in Sect. 2.1 in view of Hotta’s (1989) results, and postpone the discussion of the other cases to Sects. 3.4 and 3.5.

Let us start by considering neglected serial correlation in the signal. Under alternative Sar1 the model will be

so that the null hypothesis is \(H_{0}:\psi _{x}=0\), as in Sect. 3.1. Given

and (13), after some straightforward manipulations we can prove that the score of the spectral log-likelihood for the observed series \(y_{t}\) under the null will be given by

Once more, the time domain counterpart to the spectral score with respect to \(\psi _{x}\) is (asymptotically) proportional to the difference between the first sample autocovariance of \(f_{t|\infty }^{K}\) and its theoretical counterpart under \(H_{0}\). Therefore, the only difference with the observable case is that the autocovariance of \(f_{t|\infty }^{K}\), which is a forward filter of the Wold innovations of \(y_{t}\), is no longer 0 when \( \psi _{x}=0\), although it approaches 0 as the signal to noise ratio increases. In that case, our proposed tests would converge to the usual Breusch–Godfrey LM tests for neglected serial correlation discussed in Sect. 3.1.Footnote 8

Let us illustrate our test by means of a simple example. Imagine that \(x_{t}\) follows an AR(2) process while \(u_{t}\) is white noise. The results in Sect. 2.5 imply that when \(\psi _{x}=0, f_{t|\infty }^{K}\) will follow an AR(2) with an autoregressive polynomial \(\beta _{y}(L)\) that satisfies the condition

so that the smaller \(\sigma _{v}^{2}\) is, the closer \(f_{t|\infty }^{K}\) will be to white noise. In any case, the LM test of \(H_{0}:\psi _{x}=0\) will simply compare the first sample autocovariance of \(f_{t|\infty }^{K}\) with its theoretical value. As we mentioned before, the advantage of our frequency domain approach is that we obtain those autocovariances without explicitly computing \(\sigma _{a}^{2},\beta _{y}(L)\) or indeed \( f_{t|\infty }^{K}\).

In turn, under alternative Sma1 the equation for the signal in (17) is replaced by

so that the null hypothesis becomes \(H_{0}:\psi _{f}=0\). Then, it is straightforward to prove that this test will numerically coincide with the test of \(H_{0}:\psi _{x}=0\) in view of (18), (13) and (16).

On the other hand, under alternative Nar1 the model will be

while the equation for the non-signal component in (19) will be replaced by

under alternative Nma1. The exchangeability of signal and non-signal implies that mutatis mutandis exactly the same derivations apply to tests of neglected serial correlation in \(u_{t}\).

Finally, joint tests that simultaneously look for neglected serial correlation in the signal and non-signal components can be easily obtained by combining the two scores involved.

3.3 Parameter uncertainty

So far we have implicitly assumed known model parameters. In practice, some of them will have to be estimated under the null. Maximum likelihood estimation of the state space model parameters can be done either in the time domain using the Kalman filter or in the frequency domain.

As we mentioned before, the sampling uncertainty surrounding the sample mean \(\mu \) is asymptotically inconsequential because the information matrix is block diagonal. The sampling uncertainty surrounding the other parameters, say \(\varvec{\vartheta }\), is not necessarily so.

The solution is the standard one: replace the inverse of \({\mathcal {I}}_{ \varvec{\psi \psi }}\), which is the (\(\varvec{\psi },\varvec{\psi }\)) block of the information matrix by the (\(\varvec{\psi },\varvec{\psi }\)) block of the inverse information matrix \({\mathcal {I}}^{\varvec{\psi \psi }}=(\mathcal {I }_{\varvec{\psi \psi }}-{\mathcal {I}}_{\varvec{\psi \vartheta }}{\mathcal {I}}_{ \varvec{\vartheta \vartheta }}^{-1}{\mathcal {I}}_{\varvec{\vartheta \psi } })^{-1}\) in the quadratic form that defines the LM test. As usual, this is equivalent to orthogonalising the spectral log-likelihood scores corresponding to the parameters in \(\varvec{\psi }\) with respect to the scores corresponding to the parameters \(\varvec{\vartheta }\) estimated under the null. In this regard, the analytical expressions that we provide for the different derivatives involved can be combined with (9) to obtain computationally efficient expressions for the entire information matrix.
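The partitioned-inverse correction described above can be sketched as follows. This is a generic illustration with hypothetical names: it assumes that the tested parameters \(\varvec{\psi }\) are ordered first in the information matrix, that the score is expressed as a sample average, and that the information matrix is per observation, so the statistic is \(T\) times the quadratic form.

```python
import numpy as np


def lm_statistic(score_psi, info, n_psi, T):
    """LM statistic T * s' I^{psi psi} s with parameter uncertainty accounted for.

    score_psi : average score of the tested parameters at the null estimates
                (the scores of the estimated parameters are zero there)
    info      : full information matrix, with the psi block in the top-left corner
    n_psi     : number of tested parameters
    """
    s = np.atleast_1d(np.asarray(score_psi, dtype=float))
    info = np.asarray(info, dtype=float)
    i_pp = info[:n_psi, :n_psi]
    i_pt = info[:n_psi, n_psi:]
    i_tt = info[n_psi:, n_psi:]
    # Schur complement: I_pp - I_pt I_tt^{-1} I_tp, whose inverse is I^{psi psi}.
    schur = i_pp - i_pt @ np.linalg.solve(i_tt, i_pt.T)
    return float(T * s @ np.linalg.solve(schur, s))


info = np.array([[2.0, 0.5, 0.3],
                 [0.5, 1.0, 0.2],
                 [0.3, 0.2, 1.5]])
print(lm_statistic([0.1], info, n_psi=1, T=200))
```

When the Schur complement is singular, `np.linalg.solve` raises `LinAlgError` (or returns numerically meaningless values if the matrix is only nearly singular), which is the numerical symptom of a rank-deficient information matrix.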

3.4 Potential pitfalls

As we mentioned in Sect. 2.1, we maintain the innocuous assumption that \(p_{x}>q_{x}\), so that the signal component is a “top-heavy” model. However, by increasing the order of the MA polynomial of the signal, as the Sma1 alternative hypothesis does, the extended UCARIMA model may become underidentified despite the original null model being identified. This will happen when \(p_{x}=q_{x}+1\) but \(p_{u}<q_{u}+1\), in which case the null model will be just identified. An important example would be:

with \(f_{t}\) and \(u_{t}\) bivariate white noise orthogonal at all leads and lags. The null hypothesis of interest is \(H_{0}:\psi _{f}=0\), so that the model under the null is a univariate AR(1) \(+\) white noise process, while the signal under the alternative is an ARMA(1, 1) instead with moving average coefficient \(\psi _{f}\). In this context, it is possible to formally prove that

Proposition 1

The score with respect to \(\psi _{f}\) of model (20) reparametrised in terms of \(\gamma _{yy}(0), \gamma _{yy}(1), \alpha \) and \(\psi _{f}\) is 0 when \(\alpha \ne 0\) regardless of the value of \(\psi _{f}.\)

Intuitively, the problem is that \(\psi _{f}\) cannot be identified because the reduced form model for the observed series is an ARMA(1, 1) fully characterised by its variance, its first autocovariance and \(\alpha \) under both the null and the alternative. As a result, the original and extended log-likelihood functions would be identical at their respective optima, which in turn implies that the LR and LM tests will be trivially 0.Footnote 9
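The observational equivalence behind this result can be checked numerically. In the sketch below, which is an illustration rather than the authors' proof, the MA polynomial of the signal is assumed to be \(1+\psi _{f}L\) (the sign convention is an assumption), and the hypothetical function solves for the pair of variances that reproduces a given pair of autocovariances of \((1-\alpha L)y_{t}\) for an arbitrary value of \(\psi _{f}\).

```python
import numpy as np


def variances_matching_reduced_form(alpha, psi_f, c0, c1):
    """Variances (sigma2_f, sigma2_u) reproducing the MA(1) part of the reduced form.

    (1 - alpha L) y_t = (1 + psi_f L) f_t + (1 - alpha L) u_t has
      variance            c0 = (1 + psi_f^2) sigma2_f + (1 + alpha^2) sigma2_u
      first autocovariance c1 = psi_f sigma2_f - alpha sigma2_u
    and zero autocovariances beyond lag 1, so (alpha, c0, c1) pin down the
    whole reduced form.
    """
    a = np.array([[1.0 + psi_f ** 2, 1.0 + alpha ** 2],
                  [psi_f, -alpha]])
    return np.linalg.solve(a, np.array([c0, c1]))


alpha = 0.5
c0, c1 = 1.0 + (1.0 + alpha ** 2) * 1.0, -alpha * 1.0   # null model: psi_f = 0, unit variances
print(variances_matching_reduced_form(alpha, 0.0, c0, c1))
print(variances_matching_reduced_form(alpha, 0.2, c0, c1))
```

With \(\alpha =0.5\), the null parameters \((\psi _{f},\sigma _{f}^{2},\sigma _{u}^{2})=(0,1,1)\) and the alternative parameters \((0.2, 0.649, 1.260)\), rounded to three decimals, imply exactly the same autocovariances for the observed series, so the data cannot tell them apart. The linear system becomes singular at \(\psi _{f}=-\alpha \), where the Ar and Ma roots of the signal cancel, and not every value of \(\psi _{f}\) yields positive variances.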

A problem that is more difficult to detect arises when the original model is identified under the null hypothesis and the extended model is identified under the alternative but the information matrix of the extended model is singular under the null. Following Sargan (1983), we shall refer to this situation as a first-order underidentified case because in effect the additional parameter is locally identified but the usual rank condition for identification breaks down.

Although this may seem a curiosity, it turns out that this problem necessarily occurs with the Sar1 alternative hypothesis whenever alternative Sma1 leads to an underidentified model.

Let us study in more detail the AR(1) plus white noise example discussed in the previous paragraphs, for which (17) reduces to

with \(f_{t}\) and \(u_{t}\) being bivariate white noise orthogonal at all leads and lags. The null hypothesis of interest is \(H_{0}:\psi _{x}=0\), so that the model under the null is still an AR(1) plus white noise, while the signal under the alternative follows an AR(2) process. We can then show thatFootnote 10

Proposition 2

The information matrix of model (21) is singular under the null hypothesis \(H_{0}:\psi _{x}=0.\)

As we saw in Sect. 3.1, the intuition is that under the null the score of an additional AR root is the opposite of the score of an additional MA root, but the latter is identically 0 at the parameter values estimated for the original AR(1) plus white noise model in view of Proposition 1. Therefore, a standard LM test is infeasible. In contrast, there is no linear combination of the first three scores that is equal to 0 under \(H_{0}\) when \(\alpha \ne 0\), so we can consistently estimate \(\alpha , \sigma _{f}^{2}\) and \(\sigma _{u}^{2}\) if we impose the null hypothesis when it is indeed true. Likewise, there is no linear combination of the four scores that is equal to 0 when the true values of \(\alpha \) and \(\psi _{x}\) are both different from 0, so again we can consistently estimate \(\sigma _{f}^{2}, \sigma _{u}^{2}, \alpha \) and \(\psi _{x}\) in those circumstances, unlike what happened with model (20). For those reasons, it seems intuitive to report instead either a Wald test or an LR one. However, intuitions sometimes prove misleading.

It turns out that one has to be very careful in computing the significance level for the LR test and especially the Wald test because, as we will discuss below, the asymptotic distribution of the ML estimator of \(\psi _{x}\) will be highly unusual under the null. In contrast, there is a readily available LM-type test along the lines of Lee and Chesher (1986). Specifically, these authors propose to replace the usual score test by what they call an “extremum test”. Given that the first-order conditions are identically 0, their suggestion is to study the restrictions that the null imposes on higher order conditions. An equivalent procedure to deal with the singularity of the information matrix is to choose a suitable reparametrisation. We follow this second route because it will allow us to obtain asymptotically valid LR and Wald tests too.

Our approach is as follows. First, we replace \(\sigma _{f}^{2}\) and \(\sigma _{u}^{2}\) by \(\gamma _{yy}(0)\) and \(\gamma _{yy}(1)\), as in Proposition 1. As the following result shows, this change confines the singularity to the last element of the score.

Proposition 3

The \(\psi _{x}\psi _{x}\) element of the information matrix of model (21) reparametrised in terms of \(\gamma _{yy}(0),\gamma _{yy}(1),\alpha \) and \(\psi _{x}\) is zero under the null hypothesis \( H_{0}:\psi _{x}=0.\)

Second, we replace \(\psi _{x}\) by either \(\sqrt{\varphi }\) (positive root) or \(-\sqrt{\varphi }\) (negative root) and retain the value of \(\varphi \) and the sign of the transformation which leads to the largest likelihood function under the alternative. Using the results of Rotnitzky et al. (2000), we can show that under the null the asymptotic distribution of the ML estimator of \(\varphi \) will be that of a normal variable censored from below at 0. In contrast, the asymptotic distribution of the corresponding estimator of \(\psi _{x}\) will be non-standard, with a faster rate of convergence, half of its probability mass at 0 and the other half equally divided between the positive and negative sides. In this context, the LR test of the null hypothesis \(H_{0}:\varphi =0\) will be a 50:50 mixture of a \(\chi _{0}^{2}\), which is 0 with probability 1, and \(\chi _{1}^{2}\). As for the Wald test, the squared t-ratio associated with the ML estimator of \(\varphi \) will share the same asymptotic distribution. In contrast, Wald tests based on \(\psi _{x}\) will have a rather non-standard distribution which will render the t-ratio usually reported for this coefficient very misleading.
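In practice, the 50:50 mixture changes how p-values and critical values are computed. The following sketch, with a hypothetical function name, returns the asymptotic p-value of a statistic whose null distribution is an equally weighted mixture of a point mass at 0 and a \(\chi _{1}^{2}\); it uses the identity \(P(\chi _{1}^{2}>s)=\mathrm{erfc}(\sqrt{s/2})\).

```python
import math


def pvalue_half_chi2_mixture(stat):
    """P-value under a 50:50 mixture of chi2(0) (a point mass at 0) and chi2(1)."""
    if stat <= 0.0:
        return 1.0                                   # the statistic is non-negative
    return 0.5 * math.erfc(math.sqrt(stat / 2.0))    # 0.5 * P(chi2_1 > stat)


for stat in (0.0, 1.0, 2.7055, 3.8415):
    print(stat, pvalue_half_chi2_mixture(stat))
```

An implication is that the 5% critical value of the mixture is the 10% critical value of a \(\chi _{1}^{2}\), approximately 2.71, rather than the usual 3.84, so using the standard \(\chi _{1}^{2}\) critical value would make the test conservative.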

The following result explains how to conduct the score-type test.

Proposition 4

The extremum test of the null hypothesis \(H_{0}:\psi _{x}=0\) is based on the influence function

where

Given the scores for \(\gamma _{yy}(0), \gamma _{yy}(1)\) and \(\alpha \) under the null, this means that the extremum test is effectively comparing the second sample autocovariance of \(f_{t|\infty }^{K}\) with its theoretical value after taking into account the estimated nature of those model parameters. Nevertheless, the test must be one-sided because (i) \( \varphi \ge 0\) under the alternative regardless of whether we reparametrise \(\psi _{x}\) as \(\pm \sqrt{\varphi }\) and (ii) the score under the null is the same in both cases, which implies that the Kuhn–Tucker multiplier will also coincide.Footnote 11

Finally, it is worth noting that although \(\psi _{x}\) is not first-order identified because the derivative of the log-likelihood function with respect to this parameter is identically 0 and the expected value of the second derivative under the null is also 0 from Proposition 4, it is locally identified through higher order derivatives (see footnote 12).

A somewhat surprising implication of our previous results is that in this instance the usual local equivalence between Ar(1) and Ma(1) alternative hypotheses for the signal breaks down. In contrast, there are other seemingly locally equivalent alternatives. Specifically, consider the following variation on model (21):

In this case the null hypothesis of interest is \(H_{0}:\delta _{x}=0\), so that the model under the null is still an Ar(1) signal plus white noise, while the signal under the alternative is a “seasonal” Ar(3) with restricted autoregressive polynomial \(1-\alpha L-\delta _{x}L^{2}+\alpha \delta _{x}L^{3}\). The “top-heavy” nature of the signal together with the restrictions on the coefficients imply the model under the alternative should remain identified. We can then show that
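For reference, the restricted polynomial quoted above is simply the product of the Ar(1) polynomial of the null model and a purely “seasonal” second-order factor, as direct expansion confirms:

$$\begin{aligned} (1-\alpha L)(1-\delta _{x}L^{2})=1-\alpha L-\delta _{x}L^{2}+\alpha \delta _{x}L^{3}, \end{aligned}$$

so that setting \(\delta _{x}=0\) returns the Ar(1) signal of the null model.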

Proposition 5

The LM test of the null hypothesis \(H_{0}:\delta _{x}=0\) in model (23) will numerically coincide with a two-sided version of the test discussed in Proposition 4 once we correct for the sampling uncertainty in the estimation of the model parameters under the null.

Nevertheless, such a test is suboptimal for testing the null hypothesis \( H_{0}:\psi _{x}=0\) because it ignores the effective one-sided nature of its alternative.

For reasons analogous to the ones explained in Sect. 3.1, the test in Proposition 5 will also coincide with the LM test of \(H_{0}:\delta _{f}=0\) in the alternative “seasonal” model

which will again be two-sided. This equivalence is less obvious than it may seem because the signal follows a “bottom-heavy” process under the alternative. Nevertheless, the fact that the first Ma coefficient is 0 is sufficient to guarantee identifiability in this case.

Another seemingly locally equivalent alternative to the neglected Ar(1) component in the signal arises when we are interested in testing for first order serial correlation in the non-signal component \(u_{t}\). In that case the model under the alternative becomes

with \(f_{t}\) and \(v_{t}\) orthogonal at all leads and lags. The null hypothesis of interest is \(H_{0}:\psi _{u}=0\). Further, we do not expect any singularity to be present under the alternative, on the grounds that the contemporaneous aggregation of Ar(1) \(+\) Ar(1) is an Arma(2, 1). We can then show that

Proposition 6

The LM test of the null hypothesis \(H_{0}:\psi _{u}=0\) in model (25) will numerically coincide with a two-sided version of the test discussed in Proposition 4 once we correct for the sampling uncertainty in the estimation of the model parameters under the null.

As expected, the LM test of the null hypothesis \(H_{0}:\psi _{v}=0\) in the model

will also coincide because the derivatives of \(g_{yy}(\lambda )\) with respect to \(\psi _{v}\) in model (26) and with respect to \( \psi _{u}\) in model (25) only differ in their signs.

3.5 An intermediate case

So far, we have dealt with regular models in which \(p_{x}\ge q_{x}+2\) in Sect. 3.2 and irregular models in which \( p_{x}=q_{x}+1\) but \(p_{u}<q_{u}+1\) in Sect. 3.4. In this section, we study the intermediate case of \(p_{x}=q_{x}+1\) and \( p_{u}=q_{u}+1 \), which shares some features of the other two.

The results in Sect. 2.2 imply that the reduced form of such a model would be Arma(\(p_{x}+p_{u},p_{x}+p_{u}-1\)), whose \( 2p_{x}+2p_{u}\) parameters are generally sufficient to identify the structural parameters of the signal and non-signal components. Similarly, the reduced form models would be Arma(\(p_{x}+p_{u}+1,p_{x}+p_{u}\)) under alternatives Sar1 and Nar1, and Arma(\(p_{x}+p_{u},p_{x}+p_{u}\)) under alternatives Sma1 and Nma1. Since all these reduced form models identify the parameters of the associated structural models, the corresponding information matrices evaluated under the null will generally have full rank. Therefore, tests for neglected first-order serial correlation in the signal or the noise will usually be well behaved, as in Sect. 3.2.

Nevertheless, it turns out that both tests are numerically identical. To understand the reason, let us look at an Ar(1) \(+\) Ar(1) process, which is the simplest possible example. The joint alternatives that we consider are of the following form:

In this context, we can prove the following proposition:

Proposition 7

The nullity of the information matrix of model (27) is one under the joint null hypothesis \(H_{0}:\psi _{x}=\psi _{u}=0.\)

Not surprisingly, the same is true if we replace any of the Ar alternatives by its Ma counterpart. Intuitively, the reason is the following. The reduced form model under the combination of alternatives Sma1 and Nma1 is an Arma(\(p_{x}+p_{u},p_{x}+p_{u}\)), which does not have enough parameters to identify the structural parameters of the signal and non-signal components.
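The parameter-counting intuition in this paragraph can be sketched in a few lines of Python. The sketch assumes the standard result that the sum of two orthogonal Arma processes without common roots is Arma(\(p_{x}+p_{u},\max (p_{x}+q_{u},p_{u}+q_{x})\)), and it only checks a necessary order condition, not local identification at the null. The class and function names are hypothetical.

```python
from typing import NamedTuple, Tuple


class Ucarima(NamedTuple):
    """Orders of an Arma(p_x, q_x) signal plus an Arma(p_u, q_u) non-signal."""
    p_x: int
    q_x: int
    p_u: int
    q_u: int


def structural_params(m: Ucarima) -> int:
    # Ar and Ma coefficients of both components plus two innovation variances.
    return m.p_x + m.q_x + m.p_u + m.q_u + 2


def reduced_form_orders(m: Ucarima) -> Tuple[int, int]:
    # Assumed: orthogonal components with no common Ar roots.
    return m.p_x + m.p_u, max(m.p_x + m.q_u, m.p_u + m.q_x)


def reduced_form_params(m: Ucarima) -> int:
    p, q = reduced_form_orders(m)
    return p + q + 1  # Ar and Ma coefficients plus one innovation variance


def order_condition_holds(m: Ucarima) -> bool:
    """Necessary (not sufficient) counting condition for identification."""
    return reduced_form_params(m) >= structural_params(m)


null = Ucarima(1, 0, 1, 0)      # Ar(1) + Ar(1)
sar1 = Ucarima(2, 0, 1, 0)      # extra Ar root in the signal only
joint_ma = Ucarima(1, 1, 1, 1)  # Sma1 and Nma1 combined

for name, model in [("null", null), ("Sar1", sar1), ("Sma1+Nma1", joint_ma)]:
    print(name, reduced_form_orders(model), order_condition_holds(model))
```

In this example the combined Sma1 and Nma1 alternative has a reduced form with five parameters but six structural parameters, so the count fails by exactly one. A count of this kind cannot detect the singularity under the joint Ar alternatives in Proposition 7, which is a local property of the information matrix at the null.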

In principle, it might be possible to reparametrise model (27) in such a way that the single singularity of the information matrix is due to the score of one of the new parameters becoming identically 0. In that case, a square root transformation of this parameter should allow one to derive a joint extremum test of \(H_{0}:\psi _{x}=\psi _{u}=0\) along the lines of Sect. 3.4. In the interest of space, we shall not explore this possibility.

3.6 The tests in practice

Taking into account the theoretical results obtained in the previous sections, the step by step testing procedure for dynamic misspecification of the unobserved components can be described as follows:

1. Estimate the model under the null by maximum likelihood either in the time domain or in the frequency domain, making sure that it satisfies the identification conditions stated in Sect. 2.1.
2. Compute the periodogram of the data with the FFT.
3. Compute the theoretical spectral density of the unobserved components and the observable series at the estimated values of the parameters.
4. Identify the signal with the more “top-heavy” Arma component, so that \( p_{x}-q_{x}\ge 1\) and \(p_{x}-q_{x}\ge p_{u}-q_{u}\), where \(q_{x},q_{u}\) and \(p_{x},p_{u}\) are the orders of the Ma and Ar polynomials (including roots on the unit circle). In case of equality, choose the signal so that \(p_{x}\ge p_{u}\).
5. If \(p_{x}-q_{x}>1\), so that the model remains identified under all four alternatives and their combinations, then apply the following steps to both the signal and non-signal components:
   (a) Compute the scores under the alternative but evaluate them at the null.
   (b) Compute the information matrix under the alternative but evaluate it at the null.
   (c) Invert the information matrix and retain the elements corresponding to the scores of the additional parameters.
   (d) Compute the two quadratic forms defining the LM test statistics.
6. If \(p_{x}-q_{x}=1\) and \(p_{u}-q_{u}=1\), so that the model becomes underidentified under the combination of alternatives Sma1 and Nma1, then proceed as in point 5., but compute only one of the tests since the other one is numerically identical.
7. If \(p_{x}-q_{x}=1\) and \(p_{u}-q_{u}<1\), so that the model becomes underidentified under the Sma1 alternative:
   (a) Reparametrise the model as explained in Sect. 3.4.
   (b) Compute the scores under the alternative but evaluate them at the null.
   (c) Compute the information matrix under the alternative but evaluate it at the null.
   (d) Invert the information matrix and retain the element corresponding to the score of the additional parameter.
   (e) Compute the quadratic form defining the LM test statistic, and identify it with the dynamic misspecification test for the non-signal component.
   (f) If the score associated to the new autoregressive signal parameter is negative, set the dynamic misspecification test for the signal component to 0.
   (g) Otherwise, set it to the same value as the non-signal test, but use a one-sided critical value.
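Steps 2 and 3 of the procedure can be sketched as follows for an Ar(1) signal plus white noise. This is a minimal illustration only: a naive \(O(T^{2})\) discrete Fourier transform stands in for the FFT so that the code has no external dependencies, the \(1/(2\pi T)\) normalisation of the periodogram is an assumed convention, and all function names are hypothetical.

```python
import cmath
import math
from typing import List


def fourier_frequencies(T: int) -> List[float]:
    """Frequencies lambda_j = 2 pi j / T for j = 0, ..., T - 1."""
    return [2.0 * math.pi * j / T for j in range(T)]


def periodogram(y: List[float]) -> List[float]:
    """I(lambda_j) = |sum_t y_t exp(-i lambda_j t)|^2 / (2 pi T), naive DFT."""
    T = len(y)
    out = []
    for lam in fourier_frequencies(T):
        dft = sum(y_t * cmath.exp(-1j * lam * t) for t, y_t in enumerate(y))
        out.append(abs(dft) ** 2 / (2.0 * math.pi * T))
    return out


def g_signal(lam: float, alpha: float, sigma2_f: float) -> float:
    """Spectral density (times 2 pi) of a stationary Ar(1) signal."""
    return sigma2_f / (1.0 - 2.0 * alpha * math.cos(lam) + alpha ** 2)


def g_observed(lam: float, alpha: float, sigma2_f: float, sigma2_u: float) -> float:
    """Spectral density (times 2 pi) of the Ar(1) signal plus orthogonal white noise."""
    return g_signal(lam, alpha, sigma2_f) + sigma2_u


y = [0.3, -1.2, 0.8, 1.5, -0.4, 0.1, -0.9, 0.6]  # made-up data for illustration
I_yy = periodogram(y)
g_yy = [g_observed(lam, 0.7, 1.0, 1.0) for lam in fourier_frequencies(len(y))]
print([round(2.0 * math.pi * v, 3) for v in I_yy])
print([round(v, 3) for v in g_yy])
```

With this normalisation, the sum of \(2\pi I_{yy}(\lambda _{j})\) over the Fourier frequencies equals the sum of squares of the data, which is a convenient sanity check before comparing the periodogram with the theoretical spectral density.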

An example of a regular situation would be an Ar(2) \(+\) noise process, which is such that \(p_{x}-q_{x}=2\) and \(p_{u}-q_{u}=0\). In this case, the model is identified under all possible alternative hypotheses. In fact, it is overidentified when testing dynamic misspecification in the noise while it is just identified in the Sma1 case.

An example of the intermediate case would be an Ar(1) \(+\) Ar(1) process, for which \(p_{x}-q_{x}=p_{u}-q_{u}=1\).

An example of an irregular case would be an Ar(1) \(+\) noise model, including the popular random walk plus noise process. In this instance, \(p_{x}-q_{x}=1\) and \(p_{u}-q_{u}=0\). As we mentioned at the beginning of Sect. 3.4, this model becomes an Arma(1, 1) plus noise model under the Sma1 alternative, whose parameters are not identified.

We will study the finite sample behaviour of our tests for unit root versions of the first and third examples in Sects. 5.1 and 5.2, respectively.
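The case distinction in points 4 to 7 of the procedure, together with the one-sided rule for the irregular case, can be summarised in a short sketch. The function names and the string labels are hypothetical; the logic simply mirrors the inequalities on \(p_{x}-q_{x}\) and \(p_{u}-q_{u}\) stated in this section.

```python
def classify(p_x: int, q_x: int, p_u: int, q_u: int) -> str:
    """Return 'regular', 'intermediate' or 'irregular' from the Arma orders."""
    # Point 4: the signal is the more "top-heavy" component
    # (ties are broken in favour of the larger Ar order).
    if (p_u - q_u, p_u) > (p_x - q_x, p_x):
        p_x, q_x, p_u, q_u = p_u, q_u, p_x, q_x
    d_x, d_u = p_x - q_x, p_u - q_u
    if d_x < 1:
        raise ValueError("no component satisfies p - q >= 1")
    if d_x > 1:
        return "regular"       # point 5
    if d_u == 1:
        return "intermediate"  # point 6
    return "irregular"         # point 7


def signal_test_irregular(score: float, lm_two_sided: float) -> float:
    """One-sided signal statistic in the irregular case (last two sub-steps).

    The returned value must be compared with a one-sided critical value.
    """
    return 0.0 if score < 0.0 else lm_two_sided


print(classify(2, 0, 0, 0))  # Ar(2) + noise
print(classify(1, 0, 1, 0))  # Ar(1) + Ar(1)
print(classify(1, 0, 0, 0))  # Ar(1) + noise
```

The three calls correspond to the regular, intermediate and irregular examples discussed above.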

4 Comparison with tests based on the reduced form residuals

In the context of univariate time series models written in state space form, Harvey (1989), Harvey and Koopman (1992) and Durbin and Koopman (2012) suggest the calculation of neglected serial correlation tests for the reduced form residuals, \(a_{t}\), which should be white noise under the null of correct dynamic specification. For that reason, it is of some interest to compare such tests to the tests that we have derived in the previous sections. To do so, let us introduce the following two alternative hypotheses of interest:

5. Rar1: Arma(\(p_{x}+1,q_{x}\)) \(+\) Arma(\(p_{u}+1,q_{u}\)) with a common Ar root.
6. Rma1: Arma(\(p_{x},q_{x}+1\)) \(+\) Arma(\(p_{u},q_{u}+1\)) with a common Ma root.

In this context, we can prove the following result:

Proposition 8

Testing for Rar1 in the Ucarima model (1)–(4) is equivalent to testing for Ar(1)-type neglected serial correlation in the reduced form innovations, while testing for Rma1 in the structural form is the same as testing for Ma(1)-type neglected serial correlation in \(a_{t}.\)

This means that when we test for first order neglected serial correlation in the reduced form residuals the model under the alternative hypothesis is in effect:

In contrast, a test for neglected serial correlation in the signal makes use of the alternative model (17), while a test for neglected serial correlation in the non-signal component relies on (19). Therefore, while it is indeed true that misspecification of the dynamics of any of the components will generally result in the reduced form residuals of the null model being serially correlated under the alternative, as argued by Harvey and Koopman (1992), it does not necessarily follow that tests for neglected serial correlation in those residuals are asymptotically equivalent to our neglected serial correlation tests in the unobserved components.

In fact, the relative power of those three tests when \(p_{x}-q_{x}>1\) will depend on the nature of the true model under the alternative. Specifically, if we represent \(\psi _{x}\) on the horizontal axis and \(\psi _{u}\) on the vertical axis, the reduced form test of the null hypothesis \(H_{0}:\psi _{a}=0\) will have maximum power for alternatives along the 45\(^{\circ }\) line \(\psi _{u}=\psi _{x}\) since it is locally the best test of neglected serial correlation in that direction in view of Proposition 8. In contrast, the structural form tests of the null hypotheses \(H_{0}:\psi _{x}=0\) and \(H_{0}:\psi _{u}=0\) will have maximum power along their respective axes (see Demos and Sentana 1998 for a related discussion in the context of Arch tests). For the intermediate parameter combinations, we could use local power calculations along the lines of appendix B in Fiorentini and Sentana (2015) to compare our LM tests, which are based on the smoothed innovations of the state variables (the so-called auxiliary residuals), to the LM tests based on the reduced form innovations (see footnote 13). Specifically, we could obtain two isopower lines, defined as the locus of points in \(\psi _{x},\psi _{u}\) space for which the non-centrality parameter of the reduced form test is exactly the same as the non-centrality parameter of the structural tests for \(H_{0}:\psi _{x}=0\) and \(H_{0}:\psi _{u}=0\).

In principle, we could consider the joint test of the composite null hypothesis \(H_{0}:\psi _{x}=\psi _{u}=0\) mentioned at the end of Sect. 3.2, which will generally have two degrees of freedom instead. For comparing the joint test against the simple tests, though, we would have to equate their local power directly since the number of degrees of freedom would be different.

In view of the discussion in Sects. 3.4 and 3.5, though, the reduced form test and the two-sided versions of the structural tests will be identical when \(p_{x}-q_{x}=1\).

5 Monte Carlo simulation

5.1 A regular case

We first report the results of some simulation experiments based on a special case of the example discussed at the end of Sect. 3.2, in which the autoregressive polynomial of the signal contains a unit root. In this way, we can assess the finite sample reliability of the size of our proposed tests and their power relative to the reduced form test in a realistic situation in which the model remains identified under each of the four alternatives stated in Sect. 2.1.

5.1.1 Size experiment

To evaluate possible finite sample size distortions, we generate 10,000 samples of length 200 (equivalent to 50 years of quarterly data) of the following model

with \(f_{t}\) and \(u_{t}\) being contemporaneously uncorrelated bivariate Gaussian white noise. Thus, the signal component follows an Ari(1, 1) under the null, while the non-signal component is white noise. Given that \(\mu \) is inconsequential, we fix its true value to 0. We also fix the variance of \(u_{t}\) to 1 without loss of generality. As for the remaining parameters, we choose \(\sigma _{f}^{2}=1\) and \(\alpha =.7\) to clearly differentiate this design from the model in Sect. 5.2.

For each simulated sample, we use the first differences of the data to compute the following LM tests:

1. first-order neglected serial correlation in the signal (\(\chi _{1}^{2}\))
2. first-order neglected serial correlation in the non-signal (\(\chi _{1}^{2}\))
3. first-order neglected serial correlation in the reduced form residuals (\(\chi _{1}^{2}\))
4. Joint tests of null hypotheses in points 1. and 2. (\(\chi _{2}^{2}\)).

The finite sample sizes for the four tests are displayed in the first panel of Table 1. As can be seen, the actual rejection rates of all four tests at the 10, 5 and 1% levels fall within the corresponding asymptotic confidence intervals of (9.41, 10.59), (4.57, 5.43) and (.80, 1.20), so one can reliably use them.
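The confidence intervals quoted above can be reproduced with a normal approximation to the binomial distribution of the rejection frequency. The sketch below assumes 95% coverage, which is not stated explicitly in the text but matches the reported numbers for 10,000 replications; the function name is hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple


def rejection_rate_interval(
    nominal: float, n_sims: int, coverage: float = 0.95
) -> Tuple[float, float]:
    """Asymptotic interval (in %) for a Monte Carlo rejection rate under the null."""
    z = NormalDist().inv_cdf(0.5 + coverage / 2.0)
    # Standard error of a binomial proportion with success probability `nominal`.
    half_width = z * math.sqrt(nominal * (1.0 - nominal) / n_sims)
    return 100.0 * (nominal - half_width), 100.0 * (nominal + half_width)


for level in (0.10, 0.05, 0.01):
    low, high = rejection_rate_interval(level, 10_000)
    print(f"{100 * level:.0f}%: ({low:.2f}, {high:.2f})")
```

Because the half-width shrinks with the square root of the number of replications, the same calculation with 5,000 samples would give intervals roughly 41% wider.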

5.1.2 Power experiments

Next, we simulate and estimate 5,000 samples of length 200 of DGPs in which either the signal or the noise may have an additional autoregressive root, with everything else being unchanged. In particular, we consider the following four alternatives:

(a) neglected serial correlation in the signal (\(\psi _{x}=.5;\psi _{u}=0\)), for which the LM test in point 1. should be optimal
(b) neglected serial correlation in the noise (\(\psi _{x}=0;\psi _{u}=.5\)), for which the LM test in point 2. should be optimal
(c) symmetric neglected serial correlation in signal and noise (\(\psi _{x}=.5;\psi _{u}=.5\)), for which the residual LM test in point 3. should be optimal
(d) asymmetric neglected serial correlation in signal and noise (\(\psi _{x}=.6;\psi _{u}=.3\)) for which the joint LM test in point 4. should be optimal.

The raw rejection rates are reported in the last four panels of Table 1. For alternative (a), the ranking of the tests is as expected. However, for alternative (b) the LM test for the signal is able to match the power of the LM test for the noise, closely followed by the residual and joint LM tests. Therefore, misspecification in the serial correlation of the non-signal component seems to substantially alter the serial correlation pattern of the filtered values of the correctly specified signal component. There are two reasons: the parameter estimators at which the filter is evaluated are biased, and the filter weights would be the wrong ones even if we knew the true values of the estimated parameters.

The most surprising result corresponds to alternative (c), in that the joint LM test has more power than the asymptotically optimal reduced form test. In contrast, the rejection rates for alternative (d) conform to the theoretical predictions.

In summary, our results show that the tests that look for neglected serial correlation in the signal and the noise, either separately or jointly, tend to dominate in terms of power the traditional tests based on the reduced form innovations.

5.2 Local level model

Next we analyse the local level model in Appendix 1, which is a rather important practical example of the situation discussed in Sect. 3.4.

5.2.1 Size experiment

To evaluate possible finite sample size distortions, we generate 10,000 samples of length 200 of the following model

with \(f_{t}\) and \(u_{t}\) being contemporaneously uncorrelated bivariate Gaussian white noise. As before, we fix the true value of \(\mu \) to 0 and the variance of \(u_{t}\) to 1 without loss of generality. Therefore, the design depends on a single parameter: the signal to noise ratio \(\sigma _{f}^{2}\), which we choose to be 1. This choice implies a Mean Square Error of the final estimation error of \(f_{t}\) relative to \(\sigma _{f}^{2}\) of 55.28% according to expression (33), which is neither too low nor too high.
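The 55.28% figure can be checked numerically under two assumptions that are not spelled out in this section: that the local level model is the random walk plus noise process, so that \(\Delta y_{t}=f_{t}+\Delta u_{t}\), and that expression (33) amounts to averaging the Wiener–Kolmogorov mean square error \(\omega _{ff}(\lambda )=\sigma _{f}^{2}-g_{f^{K}f^{K}}(\lambda )\) over frequencies. The function name is hypothetical.

```python
import math


def relative_mse_of_signal_innovation(
    sigma2_f: float, sigma2_u: float, n: int = 4096
) -> float:
    """Frequency average of omega_ff(lambda) / sigma2_f in the local level model."""
    total = 0.0
    for j in range(n):
        lam = 2.0 * math.pi * j / n
        # Spectral density (times 2 pi) of Delta y_t = f_t + Delta u_t.
        g_dy = sigma2_f + 2.0 * sigma2_u * (1.0 - math.cos(lam))
        # MSE of the smoothed innovation: sigma2_f minus the spectral
        # density of its Wiener-Kolmogorov filtered value.
        omega_ff = sigma2_f - sigma2_f ** 2 / g_dy
        total += omega_ff / sigma2_f
    return total / n


print(round(100.0 * relative_mse_of_signal_innovation(1.0, 1.0), 2))
```

With \(\sigma _{f}^{2}=\sigma _{u}^{2}=1\) the average has the closed form \(1-1/\sqrt{5}\approx .5528\), which agrees with the value reported in the text.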

For each simulated sample, we use the first differences of the data to compute the following statistics:

1. one-sided versions of the extremum test for first-order neglected serial correlation in the signal
2. two-sided version of the same test
3. likelihood ratio version
4. Wald test based on \(\varphi \)
5. Wald test based on \(\psi _{x}\)
6. second-order neglected serial correlation in the signal
7. first-order neglected serial correlation in the non-signal
8. first-order neglected serial correlation in the reduced form residuals.

As expected from the theoretical results in Sect. 3.4, the test statistics in points 2., 6., 7. and 8. are numerically identical, so we only report one of them under the label LM2S.

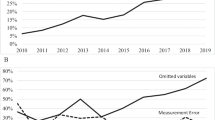

It is also important to emphasise that the statistics in points 3., 4. and 5. require the estimation of model (29). For the reasons described in Sect. 3.4, this is a non-trivial numerical task because when the true value of \(\psi _{x}\) is 0 (i) approximately half of the ML estimators of \( \psi _{x}\) are identically 0; (ii) the log-likelihood function is extremely flat in a neighbourhood of 0, especially if we parametrise it in terms of \( \psi _{x}\); and (iii) when the maximum is not at 0 the log-likelihood function tends to have two commensurate maxima for positive and negative values of \(\psi _{x}\). To make sure we have obtained the proper ML estimate, we maximise the spectral log-likelihood of model (29) four times: for positive and negative values of \(\psi _{x}\) and with this parameter replaced by \(\pm \sqrt{\varphi }\), retaining the maximum maximorum. A kernel density estimate of the mixed-type discrete-continuous distribution of the ML estimators is displayed in Fig. 1, with its continuous part scaled so that it integrates to .48, which is the fraction of non-zero estimates of \(\psi _{x}\). In addition to bimodality, the sampling distribution shows positive skewness, which nevertheless tends to disappear in non-reported experiments with \( T=10,000\). The remaining 52% of the estimates of \(\psi _{x}\) are 0, in which case the test statistics in points 1., 3., 4. and 5. will all be 0 too.

The rejection rates under the null for the tests at the 10, 5 and 1% levels are displayed in Table 2. The only procedure which seems to have a reliable size is the two-sided LM test. In contrast, its one-sided version is somewhat conservative, while the LR and especially the two Wald tests are liberal. Reassuringly, though, the size distortions of the one-sided LM test disappear fairly quickly in non-reported experiments with larger sample sizes, while the distortions of the LR and Wald tests for \(\varphi \) go down more slowly and are still noticeable even in samples as big as \(T=50{,}000\) despite the fact that the fraction of 0 estimates converges very quickly to 1/2. As expected, though, the distortions of the Wald test based on \(\psi _{x}\) persist no matter how big the sample size is because the information matrix for this parametrisation is singular.

5.2.2 Power experiments

Next, we simulate and estimate 5,000 samples of length 200 of four alternative DGPs analogous to the ones described in (a)–(d) of Sect. 5.1.2. However, since our focus is on tests of the null hypothesis \(H_{0}:\psi _{x}=0\), we only estimate the model under the null and under alternative (a). In this regard, an additional issue that we encounter in some designs is that from time to time the estimated value of \( \sigma _{u}^{2}\) is 0. In those “pile-up” cases we compute the LM and Wald tests excluding the corresponding row and column of the information matrix.

Given the substantial size distortions, we report not only raw rejection rates based on asymptotic critical values in Table 3 but also size-adjusted ones in Table 4, which exploit the Monte Carlo critical values obtained in the simulation described in the previous subsection. If we focus on this second table, we can conclude that the tests that explicitly acknowledge the implicit one-sided nature of the alternative to \(H_{0}:\psi _{x}=0\) dominate the two-sided test, except when \(\psi _{x}=0\) but \(\psi _{u}=.5\), when they tend to be equally powerful. In particular, the one-sided tests for \( H_{0}:\psi _{x}=0\) dominate the residual correlation tests even when \(\psi _{x}=\psi _{u}=.5\).
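The size adjustment described above can be sketched as follows: the critical value is taken from the simulated null distribution of a statistic rather than from its asymptotic distribution, and the rejection rate under the alternative is then computed with that value. This is a generic illustration with hypothetical function names and made-up inputs, not the exact routine behind Table 4.

```python
from typing import Sequence


def monte_carlo_critical_value(null_stats: Sequence[float], alpha: float) -> float:
    """Value strictly exceeded by about a fraction alpha of the null draws."""
    ordered = sorted(null_stats)
    n_reject = int(round(alpha * len(ordered)))
    if not 0 < n_reject < len(ordered):
        raise ValueError("alpha is too extreme for this number of draws")
    return ordered[len(ordered) - n_reject - 1]


def rejection_rate(stats: Sequence[float], critical_value: float) -> float:
    """Fraction of statistics strictly above the critical value."""
    return sum(s > critical_value for s in stats) / len(stats)


# Made-up numbers purely to show the mechanics.
null_draws = [float(i) for i in range(1, 101)]
alt_draws = [90.0, 96.0, 99.0, 120.0]
cv = monte_carlo_critical_value(null_draws, 0.05)
print(cv, rejection_rate(null_draws, cv), rejection_rate(alt_draws, cv))
```

Using a strict inequality means that statistics which are exactly 0, as happens for roughly half of the one-sided statistics under the null, never count as rejections even if the simulated critical value is itself 0.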

We can also conclude that the relative ranking of the extremum, LR and Wald tests for \(H_{0}:\varphi =0\) depends on the DGP, although when it coincides with the alternative for which they are asymptotically optimal, the extremum test dominates the LR test, which in turn dominates the Wald test.

6 Conclusions and extensions

We have derived computationally simple and intuitive expressions for score tests of neglected serial correlation in unobserved component univariate models using frequency domain methods. Our tests can focus on the state variables individually or jointly. The implicit orthogonality conditions are analogous to the conditions obtained by treating the Wiener–Kolmogorov–Kalman smoothed estimators of the innovations in the latent variables as if they were observed, but they account for their final estimation errors.

In some common situations in which the information matrix of the alternative model is singular under the null, we show that, contrary to popular belief, it is possible to derive extremum tests that are asymptotically equivalent to likelihood ratio tests, which become one-sided. We also explain how to compute asymptotically reliable Wald tests. As a result, empirical researchers will be able to report test statistics in those irregular situations too. Further, we explicitly relate the incidence of those problems to the model identification conditions and compare our tests with tests based on the reduced form prediction errors.

We conduct Monte Carlo exercises to study the finite sample reliability and power of our proposed tests. In the regular case of a latent Ari(1, 1) process cloaked in white noise, our results show that the finite sample size of the different tests is reliable. They also imply that the tests that look for neglected serial correlation in the signal and the noise, either separately or jointly, dominate in terms of power the traditional tests based on the reduced form innovations.

When we look at neglected serial correlation tests in the irregular local level model, our simulation results confirm that the finite sample distribution of the ML estimator of the additional autoregressive root in the signal is highly unusual under the null of correct specification, with almost half its mass at 0 and two modes, one positive and one negative. Not surprisingly, a Wald test based on this parameter is highly unreliable, even asymptotically. We also find some size distortions for the asymptotically valid one-sided tests of \(H_{0}:\psi _{x}=0\) (but not for the two-sided LM test), which nevertheless progressively disappear as the sample size increases. After correcting for those distortions, though, we find that the one-sided tests dominate the residual correlation tests even when \(\psi _{x}=\psi _{u}=.5\), but the relative ranking of the extremum test, the likelihood ratio test and the Wald test depends on the DGP under the alternative.

Although we have considered reasonable Monte Carlo designs, a more thorough analysis of the determinants of the size and power properties of the different tests would constitute a valuable addition.

The testing procedures we have developed can be extended in several interesting directions. First, it would be tedious but straightforward to consider models with more than two components after dealing with identification issues. More interestingly, we could also consider models with purely seasonal components (see Harvey 1989 for some examples). Tests of higher order serial correlation also deserve further consideration since they might involve singularity problems too. For example, the Ari(1, 1) plus white noise process discussed in Sect. 5.1, which yields standard test statistics for neglected first order serial correlation, gives rise to a singular information matrix when we consider tests against first and second order serial correlation simultaneously because those tests are numerically equivalent to tests against the underidentified alternative of Arima(1, 1, 2) plus white noise.

Second, we have assumed throughout the paper that the model estimated under the null is parametrically identified. Nevertheless, Harvey (1989) discusses some examples in which a Ucarima model is underidentified under the null but identified under the alternative. He formally tackles the problem by using the procedure proposed by Aitchison and Silvey (1960), which effectively adds a matrix to the information matrix to make sure that it has full rank (see also Breusch 1986).

We have also maintained the assumption of normality. To understand its implications, let \(\mu _{t|t-1}\) and \(\sigma _{t|t-1}^{2}\) denote the conditional mean and variance of \(y_{t}\) given its past alone, which can be obtained from the prediction equations of the Kalman filter. Given that the additional serial correlation parameters effectively enter through \(\mu _{t|t-1}\) only, we would expect the asymptotic distribution of our proposed tests to remain valid in the presence of non-Gaussian innovations. Dunsmuir (1979) provides a formal result which confirms our conjecture for the important class of Ar(p) plus noise processes.

Although we have only considered unobserved components with rational spectral densities, in principle our methods could be applied to long memory processes too. In this regard, it would be worth exploring the fractionally integrated alternatives considered by Robinson (1994). More generally, it would also be interesting to consider non-parametric alternatives such as the ones studied by Hong (1996), in which the lag length is implicitly determined by the choice of bandwidth parameter in a kernel-based estimator of a spectral density matrix. Another potential extension would directly deal with non-stationary models without transforming the observed variables to achieve stationarity. All these topics constitute fruitful avenues for future research.

Notes

But see Sect. 6 for a brief discussion of models that are underidentified under the null but identified under the alternative.

Although strictly speaking Proposition 2 in Hotta (1989) applies to stationary models, the emphasis on common roots is particularly important in the presence of integrated components, in which case \(p_{x}\) and \(p_{u}\) would represent the total number of Ar roots, including those on the unit circle (see Harvey 1989 for further details).

There is also a continuous version which replaces sums by integrals (see Dunsmuir and Hannan 1976).

This equivalence is not surprising in view of the contiguity of the Whittle measure in the Gaussian case (see Choudhuri et al. 2004).

The main difference between the Wiener–Kolmogorov filtered values, \( x_{t|\infty }^{K}\), and the Kalman filter smoothed values, \(x_{t|T}^{K}\), results from the dependence of the former on a double infinite sequence of observations (but see Levinson 1947). As shown by Fiorentini (1995) and Gómez (1999), though, they can be made numerically identical by replacing both pre- and post-sample observations by their least squares projections onto the linear span of the sample observations.

This is a multiplicative alternative. Instead, we could test \(H_{0}:\alpha _{x3}=0\) in the additive alternative

$$\begin{aligned} (1-\alpha _{x1}L-\alpha _{x2}L^{2}-\alpha _{x3}L^{3})x_{t}=f_{t}. \end{aligned}$$

In that case, it would be more convenient to reparametrise the model in terms of partial autocorrelations (see Barndorff-Nielsen and Schou 1973). We stick to multiplicative alternatives, which are more closely related to Ma alternatives.

It would also be possible to develop tests of Arma(p, q) against Arma(p \(+\) k, q \(+\) k) along the lines of Andrews and Ploberger (1996). We leave those tests, which will also depend on the differences between sample and population autocovariances of \(f_{t}\), for future research.

Given that \(\sigma _{f}^{2}=g_{f^{K}f^{K}}(\lambda )+\omega _{ff}(\lambda )\) for all \(\lambda \), we can also write the score as \(2\sum \nolimits _{j=0}^{T-1}\cos \lambda _{j}[2\pi I_{f^{K}f^{K}}(\lambda _{j})+\omega _{ff}(\lambda _{j})]\) in view of (14). Therefore, the score with respect to \(\psi _{x}\) also has the interpretation of the expected value of (15), which is the score when \(x_{t}\) is observed, conditional on the past, present and future values of \(y_{t}\) (see Fiorentini et al. 2014 for further details).

The only possible exception arises when the model is exactly on the boundary of the admissibility region under the null but not under the alternative. However, such anomalies tend to be associated with uninteresting cases. For example, in the Ar(1) plus noise model the null parameter configuration will be at the boundary of the admissible parameter space if and only if the non-signal component is identically 0 (see Harvey 1989; Fiorentini and Planas 2001 for other examples of admissibility restrictions on the model parameters).

Harvey (1989) proved the same result in the special case of \(\alpha =1\), which we discuss in detail in Appendix 1.

See the proof of Proposition 4 for details.

Unlike in Fiorentini and Sentana (2015), though, the scores with respect to \( \psi _{x}\) and \(\psi _{u}\) will not be orthogonal to the scores with respect to the remaining structural parameters, \(\varvec{\vartheta }\). For that reason, we should conduct the local power calculations with the orthogonalised scores, which are the residuals in the regression of the scores for \(\psi _{x}\) and \(\psi _{u}\) on the scores that define the estimated parameters, with the covariance matrices computed under the null. This procedure would not only reflect the fact that the quadratic form that defines the non-centrality parameter requires the relevant block of the inverse, as opposed to the inverse of the relevant block, but it would also take into account that the expected Jacobian of the other scores with respect to \(\psi _{x}\) and \(\psi _{u}\) will not be 0. Exploiting the information matrix equality, this effectively implies that the non-centrality parameter will be a quadratic form in the direction of departure from the null with a weighting matrix equal to \({\mathcal {I}}_{ \varvec{\psi \psi }}-{\mathcal {I}}_{\varvec{\psi \vartheta }}{\mathcal {I}}_{ \varvec{\vartheta \vartheta }}^{-1}{\mathcal {I}}_{\varvec{\vartheta \psi }}\).

References

Aitchison J, Silvey SD (1960) Maximum-likelihood estimation procedures and associated tests of significance. J R Stat Soc Ser B 22:154–171

Anderson TW, Walker AM (1964) On the asymptotic distribution of the autocorrelations of a sample from a linear stochastic process. Ann Math Stat 35:1296–1303

Andrews DWK, Ploberger W (1996) Testing for serial correlation against an ARMA(1,1) process. J Am Stat Assoc 91:1331–1342

Barndorff-Nielsen O, Schou G (1973) On the parametrization of autoregressive models by partial autocorrelations. J Multivar Anal 3:408–419

Box GEP, Hillmer S, Tiao GC (1978) Analysis and modeling of seasonal time series. In: Zellner A (ed) Seasonal analysis of economic time series. U.S. Department of Commerce, Bureau of the Census, Washington, DC, pp 309–344

Breusch TS (1978) Testing for autocorrelation in dynamic linear models. Aust Econ Pap 17:334–355

Breusch TS (1986) Hypothesis testing in unidentified models. Rev Econ Stud 53:635–651

Breusch TS, Pagan AR (1980) The Lagrange multiplier test and its applications to model specification in econometrics. Rev Econ Stud 47:239–253

Burman JP (1980) Seasonal adjustment by signal extraction. J R Stat Soc Ser A 143:321–337

Choudhuri N, Ghosal S, Roy A (2004) Contiguity of the Whittle measure for a Gaussian time series. Biometrika 91:211–218

Commandeur JJF, Koopman SJ, Ooms M (2011) Statistical software for state space methods. J Stat Softw 41:1–18

Demos A, Sentana E (1998) Testing for GARCH effects: a one-sided approach. J Econom 86:97–127

Dunsmuir W (1979) A central limit theorem for parameter estimation in stationary vector time series and its application to models for a signal observed with noise. Ann Stat 7:490–506

Dunsmuir W, Hannan EJ (1976) Vector linear time series models. Adv Appl Probab 8:339–364

Durbin J, Koopman SJ (2012) Time series analysis by state space methods, 2nd edn. Oxford University Press, Oxford

Engle RF (1978) Estimating structural models of seasonality. In: Zellner A (ed) Seasonal analysis of economic time series. U.S. Department of Commerce, Bureau of the Census, Washington, DC, pp 281–297

Engle RF, Watson MW (1980) Formulation générale et estimation de modèles multidimensionnels temporels à facteurs explicatifs non observables. Cahiers du Séminaire d’Économétrie 22:109–125

Fernández FJ (1990) Estimation and testing of a multivariate exponential smoothing model. J Time Ser Anal 11:89–105

Fiorentini G (1995) Conditional heteroskedasticity: some results on estimation, inference and signal extraction, with an application to seasonal adjustment. Unpublished Doctoral Dissertation, European University Institute

Fiorentini G, Paruolo P (2009) Testing residual autocorrelation in autoregressive processes with a zero root. Mimeo, Università di Firenze

Fiorentini G, Planas C (1998) From autocovariances to moving average: an algorithm comparison. Comput Stat 13:477–484

Fiorentini G, Planas C (2001) Overcoming nonadmissibility in ARIMA-model-based signal extraction. J Bus Econ Stat 19:455–464

Fiorentini G, Sentana E (2013) Dynamic specification tests for dynamic factor models. CEMFI Working Paper 1306

Fiorentini G, Sentana E (2015) Tests for serial dependence in static, non-Gaussian factor models. In: Koopman SJ, Shephard N (eds) Unobserved components and time series econometrics. Oxford University Press, Oxford (forthcoming)

Fiorentini G, Galesi A, Sentana E (2014) A spectral EM algorithm for dynamic factor models. CEMFI Working Paper 1411

Godfrey LG (1978a) Testing against general autoregressive and moving average error models when the regressors include lagged dependent variables. Econometrica 46:1293–1301

Godfrey LG (1978b) Testing for higher order serial correlation in regression equations when the regressors include lagged dependent variables. Econometrica 46:1303–1310

Godfrey LG (1988) Misspecification tests in econometrics: the Lagrange multiplier principle and other approaches. Cambridge University Press, Cambridge

Gómez V (1999) Three equivalent methods for filtering finite nonstationary time series. J Bus Econ Stat 17:109–116

Granger CWJ, Morris MJ (1976) Time series modelling and interpretation. J R Stat Soc Ser A 139:246–257

Harvey AC (1989) Forecasting, structural models and the Kalman filter. Cambridge University Press, Cambridge

Harvey AC, Koopman SJ (1992) Diagnostic checking of unobserved components time series models. J Bus Econ Stat 10:377–389

Hong Y (1996) Consistent testing for serial correlation of unknown form. Econometrica 64:837–864

Hotta LK (1989) Identification of unobserved components models. J Time Ser Anal 10:259–270

Koopman SJ, Harvey AC, Doornik JA, Shephard N (2009) STAMP 8.2: structural time series analyser, modeller and predictor. Timberlake Consultants Press, London

Lee LF, Chesher A (1986) Specification testing when score test statistics are identically zero. J Econom 31:121–149

Levinson N (1947) The Wiener RMS error criterion in filter design and prediction. J Math Phys 25:261–278

Lomnicki Z, Zaremba SK (1959) On some moments and distributions occurring in the theory of linear stochastic processes, II. Monatshefte für Mathematik 63:128–168

Maravall A (1979) Identification in dynamic shock-error models. Springer, Berlin

Maravall A (1987) Minimum mean squared error estimation of the noise in unobserved component models. J Bus Econ Stat 5:115–120

Maravall A (1999) Unobserved components in economic time series. In: Pesaran MH, Wickens MR (eds) Handbook of applied econometrics. Volume I: Macroeconomics. Blackwell, Oxford, pp 12–72

Maravall A (2003) A class of diagnostics in the ARIMA-model-based decomposition of a time series. In: Manna M, Peronaci R (eds) Seasonal adjustment. European Central Bank, Frankfurt, pp 23–36

Nerlove M (1967) Distributed lags and unobserved components of economic time series. In: Fellner WEA (ed) Ten economic essays in the tradition of Irving Fisher. Wiley, New York, pp 126–169

Nerlove M, Grether D, Carvalho J (1979) Analysis of economic time series, a synthesis. Academic Press, New York

Newey WK (1985) Maximum likelihood specification testing and conditional moment tests. Econometrica 53:1047–1070

Pierce DA (1978) Seasonal adjustment when both deterministic and stochastic seasonality are present. In: Zellner A (ed) Seasonal analysis of economic time series. U.S. Department of Commerce, Bureau of the Census, Washington, DC, pp 242–269

Priestley MB (1981) Spectral analysis and time series, vol I, II. Academic Press, London

Robinson PM (1994) Efficient tests of nonstationary hypotheses. J Am Stat Assoc 89:1420–1437

Rotnitzky A, Cox DR, Bottai M, Robins J (2000) Likelihood-based inference with singular information matrix. Bernoulli 6:243–284

Sargan JD (1983) Identification and lack of identification. Econometrica 51:1605–1634

Tauchen G (1985) Diagnostic testing and evaluation of maximum likelihood models. J Econom 30:415–443

Whittle P (1962) Gaussian estimation in stationary time series. Bull Int Stat Inst 39:105–129

Additional information

We are grateful to Agustín Maravall and Andrew Harvey, as well as audiences at the V Italian Congress of Econometrics and Empirical Economics (Salerno, 2015) and the V Workshop in Time Series Econometrics (Zaragoza, 2015) for helpful comments and discussions. The suggestions of Victor Aguirregabiria (the editor) and a referee have also improved the presentation of the paper. Of course, the usual caveat applies. Financial support from MIUR through the project “Multivariate statistical models for risk assessment” (Fiorentini) and the Spanish Ministries of Science and Innovation and Economy and Competitiveness through grants ECO 2011-26342 and 2014-59262, respectively (Sentana), is gratefully acknowledged.

Appendices

Appendix 1: Local level model

1.1 Testing for neglected serial correlation in the trend

1.1.1 Against AR(1) alternatives

Consider the following modified version of model (21)

with \(f_{t}\) and \(u_{t}\) orthogonal at all leads and lags. The main difference is that we have replaced the covariance stationarity hypothesis for the signal \(x_{t}\) by a unit root one. As before, the null hypothesis of interest remains \(H_{0}:\psi _{x}=0\), so that the model under the null is simply a random walk signal plus white noise, while the signal under the alternative is an Ari(1, 1) with autoregressive coefficient \(\psi _{x}\).

In order to use spectral methods we need to take first differences of the observed variable to make it stationary, which yields

Hence, it is easy to see that

Similarly, the spectral density of \(\Delta y_{t}\) will be

The reduced form of \(\Delta y_{t}\) is an Ima(1, 1) process with Ma coefficient \(\beta _{y}\) given by

where \(q=\sigma _{f}^{2}/\sigma _{u}^{2}\) denotes the signal to noise ratio, and residual variance
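The mapping from the signal to noise ratio to the reduced form parameters can be illustrated with a minimal sketch. It assumes the sign convention \(\Delta y_{t}=a_{t}+\beta _{y}a_{t-1}\) and uses the standard local level result that \(\beta _{y}\) is the invertible root of \(\beta ^{2}+(q+2)\beta +1=0\); the paper's own convention may differ, and the function and variable names are hypothetical.

```python
import math

def reduced_form_ima11(sigma2_f, sigma2_u):
    """Return (beta_y, sigma2_a) for Delta y_t = a_t + beta_y * a_{t-1}.

    Assumed convention: a plus sign in front of beta_y, with sigma2_u > 0.
    """
    q = sigma2_f / sigma2_u  # signal to noise ratio
    # Invertible root of beta^2 + (q + 2) * beta + 1 = 0
    beta_y = (-(q + 2.0) + math.sqrt(q * q + 4.0 * q)) / 2.0
    # Matching the first autocovariance gives beta_y * sigma2_a = -sigma2_u
    sigma2_a = -sigma2_u / beta_y
    return beta_y, sigma2_a

sigma2_f, sigma2_u = 0.5, 2.0  # illustrative values only
beta_y, sigma2_a = reduced_form_ima11(sigma2_f, sigma2_u)

# Autocovariances of Delta y_t implied by the structural model under the null
gamma0 = sigma2_f + 2.0 * sigma2_u
gamma1 = -sigma2_u

# Autocovariances implied by the reduced form MA(1)
gamma0_rf = (1.0 + beta_y ** 2) * sigma2_a
gamma1_rf = beta_y * sigma2_a

print(beta_y, sigma2_a, gamma0 - gamma0_rf, gamma1 - gamma1_rf)
```

Both representations reproduce the same variance and first autocovariance of \(\Delta y_{t}\), which is all that the Gaussian likelihood of the differenced series depends on under the null.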

As is well known (see e.g. Priestley 1981, section 10.3), the variance of the final estimation error of \(f_{t}\) will be given by

because

and

Interestingly, we would obtain exactly the same expression by working with pseudo-spectral densities in levels because

The partial derivatives of the spectral density are

Under the null of \(H_{0}:\psi _{x}=0\) those derivatives become

which implies that

for all \(\lambda \). Obviously, exactly the same linear combination of the elements of \(g_{yy}^{-1}(\lambda )\partial g_{yy}(\lambda )/\partial {\varvec{\theta }}\) will be 0 too. Therefore, the information matrix of the model, which is given by

will only have rank 2 under the null. In view of this result, Harvey (1989) rightly concludes that a standard LM test is infeasible.
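The singularity can be checked numerically with a short sketch. It assumes that, up to the factor \(1/(2\pi )\), the spectral density of \(\Delta y_{t}\) is \(\sigma _{f}^{2}/(1+\psi _{x}^{2}-2\psi _{x}\cos \lambda )+2\sigma _{u}^{2}(1-\cos \lambda )\), and it approximates the information matrix by averaging outer products of \(g^{-1}\partial g/\partial {\varvec{\theta }}\) over Fourier frequencies. The model form, the scaling and all names are assumptions made for illustration.

```python
import math

def scaled_gradient(lam, sigma2_f, sigma2_u, psi_x):
    """g^{-1} * dg/dtheta at frequency lam, theta = (sigma2_f, sigma2_u, psi_x)."""
    c = math.cos(lam)
    ar = 1.0 + psi_x ** 2 - 2.0 * psi_x * c          # |1 - psi_x e^{-i lam}|^2
    g = sigma2_f / ar + 2.0 * sigma2_u * (1.0 - c)   # density up to 1/(2 pi)
    dg = (1.0 / ar,                                  # d g / d sigma2_f
          2.0 * (1.0 - c),                           # d g / d sigma2_u
          2.0 * sigma2_f * (c - psi_x) / ar ** 2)    # d g / d psi_x
    return [d / g for d in dg]

def information_matrix(sigma2_f, sigma2_u, psi_x, n_freq=512):
    """Average of 0.5 * d d' over the Fourier frequencies (assumed scaling)."""
    info = [[0.0] * 3 for _ in range(3)]
    for j in range(n_freq):
        d = scaled_gradient(2.0 * math.pi * j / n_freq, sigma2_f, sigma2_u, psi_x)
        for r in range(3):
            for s in range(3):
                info[r][s] += 0.5 * d[r] * d[s] / n_freq
    return info

def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

info_null = information_matrix(1.0, 1.0, 0.0)   # psi_x = 0
info_alt = information_matrix(1.0, 1.0, 0.5)    # psi_x = 0.5

det_null = det3(info_null)
det_alt = det3(info_alt)
# Leading 2x2 block: information on (sigma2_f, sigma2_u) under the null
minor_null = info_null[0][0] * info_null[1][1] - info_null[0][1] ** 2

print(det_null, minor_null, det_alt)
```

In this sketch the full determinant vanishes (up to rounding) at \(\psi _{x}=0\), while the block for the two variances and the full matrix away from the null remain nonsingular, so the matrix loses exactly one rank under the null.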

In contrast, there is no linear combination of the first two derivatives that is equal to 0 under \(H_{0}\), so we can consistently estimate \(\sigma _{f}^{2}\) and \(\sigma _{u}^{2}\) if we impose the null hypothesis when it is indeed true. Likewise, there is no linear combination of the three derivatives that is equal to 0 under the alternative either, so again we can consistently estimate \(\sigma _{f}^{2}, \sigma _{u}^{2}\) and \(\psi _{x}\) in those circumstances. For that reason, Harvey (1989) recommends reporting either a Wald test or an LR one, which for reasons explained in Sect. 3.4 turns out not to be sound advice.

Nevertheless, an LM-type test is readily available once more along the same lines as in Sect. 3.4. Specifically, we can tackle the problem created by (34) by reparametrisation. First, we are going to replace \(\sigma _{f}^{2}\) and \(\sigma _{u}^{2}\) by \(\gamma _{\Delta y\Delta y}(0)\) and \(\gamma _{\Delta y\Delta y}(1)\). Thus, it is easy to see from (31) and (32) that

which are well defined as long as \(\psi _{x}\ne -\frac{1}{2}\) (or if \( \gamma _{\Delta y\Delta y}(0)+2\gamma _{\Delta y\Delta y}(1)=0\) when \(\psi _{x}=-\frac{1}{2}\)).
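A round trip between the two parametrisations can be sketched as follows. The block assumes that \(\Delta x_{t}\) is a stationary Ar(1) with coefficient \(\psi _{x}\) and innovation variance \(\sigma _{f}^{2}\), so that \(\gamma _{\Delta y\Delta y}(0)=\sigma _{f}^{2}/(1-\psi _{x}^{2})+2\sigma _{u}^{2}\) and \(\gamma _{\Delta y\Delta y}(1)=\psi _{x}\sigma _{f}^{2}/(1-\psi _{x}^{2})-\sigma _{u}^{2}\); these expressions and the function names are assumptions derived from that model form.

```python
def autocov_from_structural(sigma2_f, sigma2_u, psi_x):
    """Map (sigma2_f, sigma2_u) to (gamma0, gamma1) of Delta y_t for given psi_x."""
    var_dx = sigma2_f / (1.0 - psi_x ** 2)   # variance of the AR(1) Delta x_t
    gamma0 = var_dx + 2.0 * sigma2_u
    gamma1 = psi_x * var_dx - sigma2_u
    return gamma0, gamma1

def structural_from_autocov(gamma0, gamma1, psi_x):
    """Inverse map; breaks down when 1 + 2 * psi_x = 0."""
    denom = 1.0 + 2.0 * psi_x
    if denom == 0.0:
        raise ValueError("mapping is not defined at psi_x = -1/2")
    sigma2_f = (1.0 - psi_x ** 2) * (gamma0 + 2.0 * gamma1) / denom
    sigma2_u = (psi_x * gamma0 - gamma1) / denom
    return sigma2_f, sigma2_u

# Illustrative values only
g0, g1 = autocov_from_structural(1.5, 0.7, 0.3)
s2f, s2u = structural_from_autocov(g0, g1, 0.3)
print(s2f, s2u)
```

At \(\psi _{x}=0\) the inverse map collapses to \(\sigma _{f}^{2}=\gamma _{\Delta y\Delta y}(0)+2\gamma _{\Delta y\Delta y}(1)\) and \(\sigma _{u}^{2}=-\gamma _{\Delta y\Delta y}(1)\), the familiar local level expressions.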

With this notation, the spectral density becomes

The derivatives with respect to these new parameters are

Under the null of \(H_{0}:\psi _{x}=0\), these scores reduce to

Although we have not yet eliminated the singularity, we have at least confined it to the last element of the score. If we further reparametrise \( \psi _{x}\) as \(\pm \sqrt{\varphi }\), the spectral density becomes

Tedious algebra shows that the \(\partial g_{\Delta y\Delta y}(\lambda )/\partial \varphi \) evaluated at \(\varphi =0\) will be equal to

where we have used the fact that

under the null. Hence, the extremum test for \(\psi _{x}\), which coincides with the LM test for \(\varphi \), is going to be based on the second autocovariance of the smoothed estimates of \(f_{t}\). Importantly, Lee and Chesher (1986) show that the one-sided version of this extremum test continues to be asymptotically equivalent to both the LR and a one-sided version of the Wald test for \(\varphi \).
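The appearance of the second autocovariance can be traced with a short second-order expansion. The sketch below is not the paper's own derivation: it assumes the spectral density of \(\Delta y_{t}\) is proportional to \(\sigma _{f}^{2}/(1+\psi _{x}^{2}-2\psi _{x}\cos \lambda )+2\sigma _{u}^{2}(1-\cos \lambda )\), drops the \(1/(2\pi )\) factor, and writes \(A=\gamma _{\Delta y\Delta y}(0)+2\gamma _{\Delta y\Delta y}(1)\), a shorthand introduced here.

```latex
\begin{align*}
\sigma_f^2 &= \frac{(1-\psi_x^2)\,A}{1+2\psi_x}
            = A\,(1-2\psi_x+3\psi_x^2)+O(\psi_x^3), \\
\sigma_u^2 &= \frac{\psi_x\gamma_{\Delta y\Delta y}(0)-\gamma_{\Delta y\Delta y}(1)}{1+2\psi_x}
            = -\gamma_{\Delta y\Delta y}(1)+A\,\psi_x-2A\,\psi_x^2+O(\psi_x^3), \\
\frac{1}{1+\psi_x^2-2\psi_x\cos\lambda}
           &= 1+2\psi_x\cos\lambda+(4\cos^2\lambda-1)\,\psi_x^2+O(\psi_x^3), \\
g_{\Delta y\Delta y}(\lambda)
           &\propto A-2\gamma_{\Delta y\Delta y}(1)(1-\cos\lambda)
             +0\cdot\psi_x
             +2A\cos(2\lambda)\,\psi_x^2+O(\psi_x^3).
\end{align*}
```

The first-order term cancels identically, which is the singularity in the score for \(\psi _{x}\). With \(\varphi =\psi _{x}^{2}\), the derivative with respect to \(\varphi \) at \(\varphi =0\) is then proportional to \(2A\cos 2\lambda \), and \(A=\sigma _{f}^{2}\) under the null, so the relevant moment involves \(\cos 2\lambda \) rather than \(\cos \lambda \).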

1.1.2 Against MA(1) alternatives

Consider now the following variation on model (30):

with \(f_{t}\) and \(u_{t}\) orthogonal at all leads and lags. The null hypothesis of interest is \(H_{0}:\psi _{f}=0\), so that the model under the null is still a random walk signal plus white noise, while the signal under the alternative is an Ima(1, 1) with moving average coefficient \( \psi _{f}\).

In this case, the stationary model is

Hence, it is easy to see that

Similarly, the spectral density of \(\Delta y_{t}\) will be

The partial derivatives of this spectral density are

Under the null of \(H_{0}:\psi _{f}=0\) those derivatives become

which confirms that (34) also holds for this model.

Let us now try to isolate the singularity in a single parameter by using the same procedure as in the previous section. First, we replace \(\sigma _{f}^{2}\) and \(\sigma _{u}^{2}\) by \(\gamma _{\Delta y\Delta y}(0)\) and \( \gamma _{\Delta y\Delta y}(1)\). Thus, it is easy to see from (36) and (37) that

which are well defined as long as \(\psi _{f}\ne 1\).
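The analogous round trip for the moving average alternative can be sketched under one explicit assumption: the sign convention \(\Delta x_{t}=f_{t}-\psi _{f}f_{t-1}\), chosen here because it reproduces the restriction \(\psi _{f}\ne 1\). Under that assumption \(\gamma _{\Delta y\Delta y}(0)=(1+\psi _{f}^{2})\sigma _{f}^{2}+2\sigma _{u}^{2}\) and \(\gamma _{\Delta y\Delta y}(1)=-\psi _{f}\sigma _{f}^{2}-\sigma _{u}^{2}\); the function names are hypothetical.

```python
def ma_autocov_from_structural(sigma2_f, sigma2_u, psi_f):
    """Map (sigma2_f, sigma2_u) to (gamma0, gamma1) of Delta y_t.

    Assumed convention: Delta x_t = f_t - psi_f * f_{t-1}.
    """
    gamma0 = (1.0 + psi_f ** 2) * sigma2_f + 2.0 * sigma2_u
    gamma1 = -psi_f * sigma2_f - sigma2_u
    return gamma0, gamma1

def ma_structural_from_autocov(gamma0, gamma1, psi_f):
    """Inverse map; breaks down when psi_f = 1."""
    denom = (1.0 - psi_f) ** 2
    if denom == 0.0:
        raise ValueError("mapping is not defined at psi_f = 1")
    # gamma0 + 2 * gamma1 = (1 - psi_f)^2 * sigma2_f
    sigma2_f = (gamma0 + 2.0 * gamma1) / denom
    sigma2_u = -gamma1 - psi_f * sigma2_f
    return sigma2_f, sigma2_u

# Illustrative values only
m0, m1 = ma_autocov_from_structural(1.5, 0.7, 0.4)
ms2f, ms2u = ma_structural_from_autocov(m0, m1, 0.4)
print(ms2f, ms2u)
```

At \(\psi _{f}=1\) the sum \(\gamma _{\Delta y\Delta y}(0)+2\gamma _{\Delta y\Delta y}(1)\) is 0 for any \(\sigma _{f}^{2}\), so the two autocovariances no longer pin down the signal variance.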

With this notation, the spectral density becomes

The derivatives with respect to these new parameters are